Cherry (N.X.) Zhao’s research has taken her around the world, from Hong Kong to Harvard to Silicon Valley and the Adobe Research team. As a Research Scientist, Zhao focuses on computer graphics, machine learning, and human-computer interaction. Her mission is to create tools that help novice designers express their creativity.

We talked to Zhao about how she uses data to build new technologies, her path to Adobe Research, and what she loves most about her work.

How did you first get interested in graphics and human-computer interaction?

When I was getting ready to go for my PhD, I went to several graphics conferences. As soon as I saw their cool demos, I knew that was what I wanted to do. From there, I became really interested in figuring out how to make creative tools more efficient.

At that time, machine learning was becoming popular and deep learning was becoming more powerful, so I wanted to use those technologies to help people create graphic designs.

Of course, deep learning needs a lot of data from humans, and that data needs to be high quality to train or customize models. So I also became interested in self-supervised learning, which is a way of utilizing data without having to label or annotate it. That’s how I ended up at the intersection of graphics, data, and human-computer interaction: the data is trying to analyze something, graphics is trying to create something, and the human-computer interaction piece connects it all to the people who use the tools.

Which technologies are you excited about right now, and how are they impacting your work at Adobe Research?

One of the things I worked on for my PhD was data-driven graphic design, which means using machine learning and data to help people do graphic design for things like posters, websites, and slides. Using limited annotated data, we created several algorithms that automatically generate a design by recommending customized elements, such as fonts, colors, images, and layouts. In this way, users can easily create a “cute poster” or a “romantic story.”

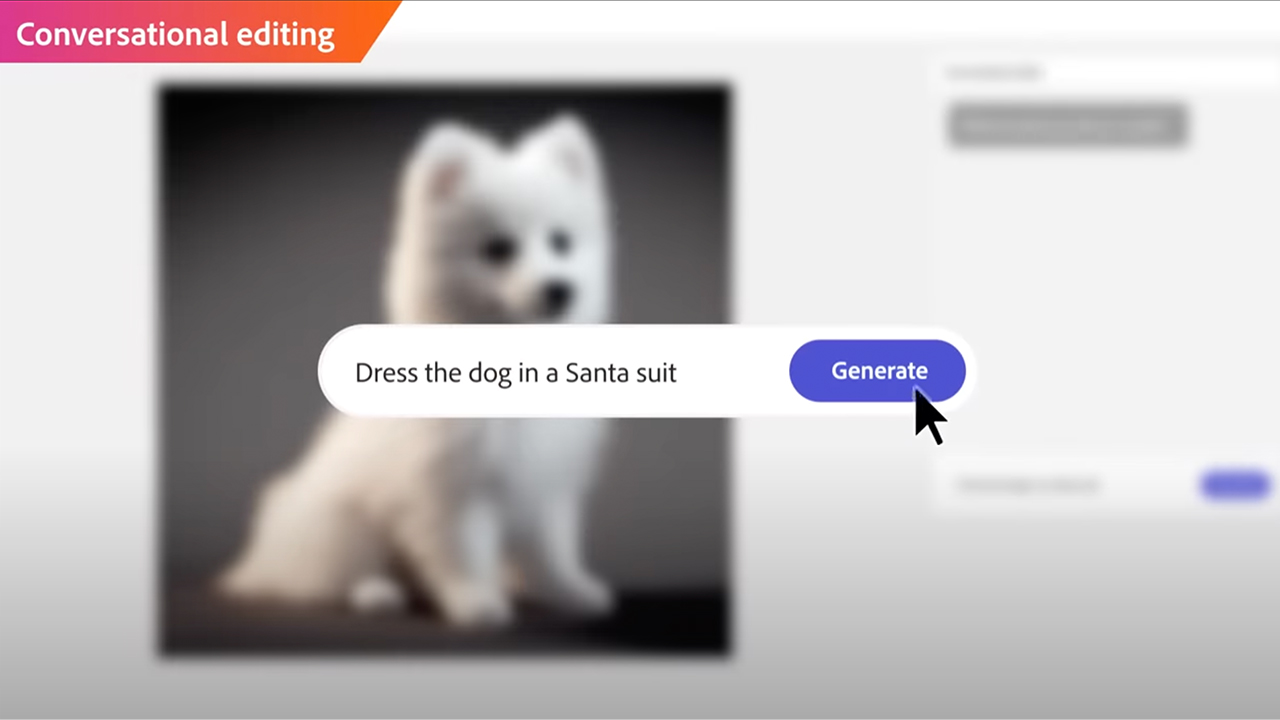

Now that I’m at Adobe, with more resources, I’m getting to work on broader topics covering diverse modalities, including images, vector graphics, and 3D shapes. Rather than focusing only on how to generate them, I’m studying how to edit these modalities based on a user’s requirements so I can help more people express their ideas. This work will allow people to personalize their results easily with simple interactions, like text instructions, sketches, and reference examples.

How did you decide to join Adobe Research? How do you hope your research will help Adobe users?

When I was working on my PhD, I read a lot of papers from Adobe because their work was related to my research. During my year at Harvard, I also collaborated with Zoya Bylinskii, a former Research Scientist at Adobe Research, on a paper about helping people create complex icons with a sentence and a single click.

Then, during the pandemic, I was back in Hong Kong. But I always knew I wanted to come to Adobe Research and work with this group of researchers.

I’ve been with Adobe Research now for about a year, and I’m really excited about using technology to help people communicate more effectively. That’s something I plan to keep working on throughout my research journey.

How would you describe the culture at Adobe Research?

It’s such a supportive environment, with lots of freedom and so many people to learn from. You can express your ideas, explore, and find collaborators with similar interests. And if you have questions, you can ask anyone for help, no matter what level the person is at in the organization.

Where do you hope technology will take us in the next ten years?

With generative AI techniques, I think communication will keep getting better and better. You’ll be able to create anything you want. And, personally, I hope we’ll have robots that can cook for us!

Wondering what else is happening inside Adobe Research? Check out our latest news.