In her work, Research Scientist Shunan Guo transforms complex data into meaningful visualizations and communicates the insights they reveal. She and several Adobe Research colleagues, interns, and university collaborators were recently awarded Best Paper Honorable Mentions at IEEE VIS 2022 for a project that uses augmented reality (AR) to support decision making during in-store shopping and for research into visualizing missing data in scatterplots.

We talked to Guo about some of the most interesting challenges in data visualization and visual analytics, what it was like to start at Adobe as an intern, and how data may come together in the metaverse.

How did you first get interested in information visualization and visual analytics?

My PhD supervisor was working on a collaborative project with a hospital. They wanted to add interactive, user-centered intelligence to their system so they could see more than just a patient’s background. I helped solve that problem using data visualization and visual analytics.

The challenge is that each patient’s clinical record is a sequence of medical events. For example, maybe today you had a doctor’s visit, and last week you had a blood test. This data is very complex because of the number of events and the number of patients. We wanted to summarize the patterns across these sequences and make predictions.

As it turns out, this work aligns with how Adobe approaches data and customer journeys, and that’s how I came to Adobe for a summer internship.

Can you tell us what your internship was like, and what you worked on?

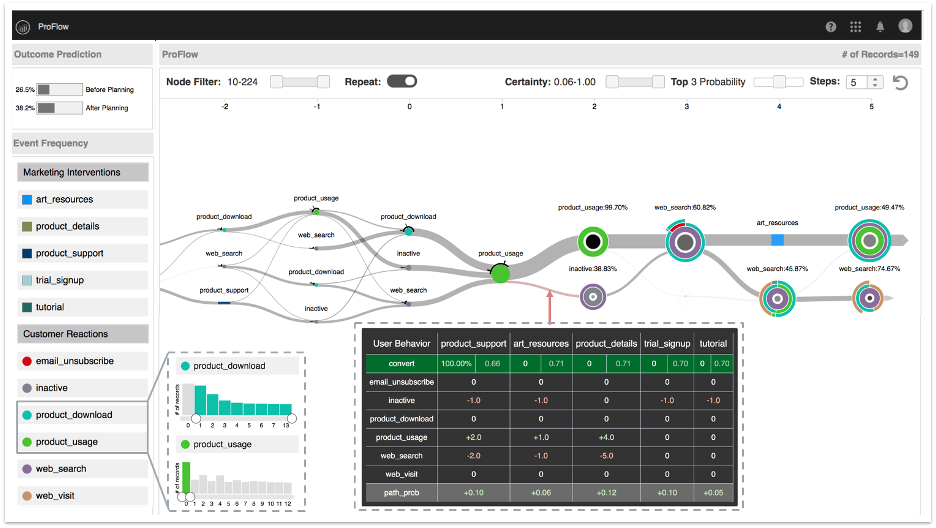

I had an extended six-month internship. My mentor and I created a project about predicting customer behavior and suggesting actions. For example, a marketer may want to understand a customer’s historical behavior and see potential future behavior to plan the next action, such as the best type of email to send. We created a prototype and published two related papers at ACM CHI 2019. We also presented the project, called Journey Genius, at Adobe Summit Sneaks in 2019.

It was a lucky time to be an intern. It was 2018, and all the interns were working in the same building as the full-time researchers, so my mentor and I got to discuss our research often. That’s how I learned what doing research at a company is like and saw how my work fit with Adobe’s applications.

I was on the fence about choosing academia or industry, but this experience helped me decide to join Adobe after school. I saw that there were lots of opportunities to collaborate with researchers from other areas. And I liked being able to test out whether my research is practical, applicable, and valuable to customers.

You recently worked on an augmented reality (AR) shopping project. What can you tell us about that?

I worked with some of my Adobe Research colleagues, along with one of my talented interns, to create an immersive shopping experience that helps when you’re struggling to choose which product to buy. You might be standing in front of a bunch of items and they all look similar to you. So you may look at the labels or check online customer reviews on your phone. But wouldn’t it be nice to have the comparison happen in an immersive environment?

With our project, you can just use your phone’s camera to capture a label. From there, we can pull out information about the product and create visualization charts for comparing products. Say you’re shopping for protein bars: you could see a visualization comparing protein, carbs, or other factors you choose.

Of course, this application depends on the data that’s available and what you can retrieve online. But connecting physical shopping to the digital world opens an opportunity to provide more advanced shopping experiences to in-store customers, such as real-time product recommendations and discount offers. This research isn’t part of a product yet, but it earned a Best Paper Honorable Mention at IEEE VIS 2022, and it was included in an Adobe press release about technologies that will power the future of the metaverse.

What do you think about the metaverse, and how does your AR shopping project fit in?

I think the metaverse has two main aspects. The first is that it’s trying to create a common virtual space where people can interact with each other.

The second is trying to do the kinds of things you do in the real world, like shopping, in a virtual space. For that, you need data. With data, you can do more: you can identify a person’s persona and develop applications that provide personalized virtual experiences.

What else are you working on now?

We know that users want insights from their dashboards, so most of my research now focuses on automating insight discovery. That includes recommending visualizations, generating reports that summarize insights, and creating captions that describe insights to help users tell a story with data.

I’m also working on a project on the creative design side. When a marketer wants to send an email to customers, we recommend styles based on past email designs. If the user changes something, say the text color, we can change other parts of the design to match that style.

What insight would you share with someone who’s interested in joining your team at Adobe Research?

Adobe Research is a great place to make your research both innovative and applicable. You are allowed to boldly explore research directions that interest you, and you will have even more fun if you’re equally passionate about having your research integrated into products. It’s something I really enjoy because I like to see my research contribute to products people use every day.

Interested in working with our team of research scientists and engineers? Learn more about careers with Adobe Research!