Videos with spatial audio are rapidly proliferating thanks to advances in mobile phone microphones and AR/VR technology. One especially popular type of video with spatial audio is the autonomous sensory meridian response (ASMR) video. In these videos, “ASMRtists” often gently move sound-producing objects in front of a video camera paired with a spatial microphone. The resulting videos often relax viewers or give them a “tingling” sensation.

We ask:

Can we leverage this new trove of video with spatial audio to teach machines to match the location of what they see with what they hear?

Moreover, unlike most traditional machine learning datasets, these videos aren’t labelled at all:

Can we teach machines from video data without any labels?

Consider the following video. Please wear wired headphones to appreciate the spatial (surround) sound effect:

Did you notice that your spatial perception of the speaker moving from left to right was consistent between the visual and audio streams? Now consider this video:

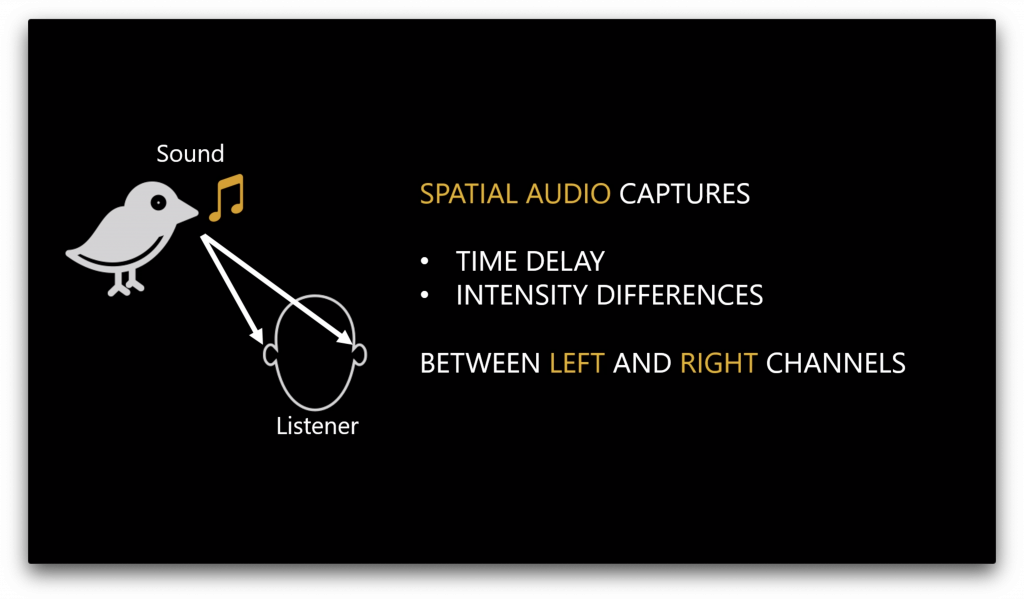

Did you notice that there is an obvious discrepancy between what you saw and heard? Here we have flipped the left and right audio channels, so the direction of the speaker’s voice no longer matches where they appear on the screen. You can perceive this because both videos contain spatial audio: the audio emulates our real-world auditory experience by using separate left and right (i.e., stereo) channels to deliver binaural cues that shape spatial perception.
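To make “binaural cues” a little more concrete, here is a minimal NumPy sketch of one classic cue, the interaural level difference (ILD): the energy ratio between the right and left channels, in decibels. This is a hand-crafted illustration, not the learned approach described in this post; the function names `interaural_level_difference` and `flip_channels` and the synthetic panned tone are hypothetical. It shows that swapping the channels negates the ILD, which is the kind of inconsistency you heard in the second video.

```python
import numpy as np

def interaural_level_difference(stereo: np.ndarray, eps: float = 1e-12) -> float:
    """Right-vs-left level difference in dB for a (2, num_samples) array.

    Positive values: right channel louder (source appears to the right).
    Negative values: left channel louder (source appears to the left).
    """
    left, right = stereo[0], stereo[1]
    left_energy = np.sum(left ** 2) + eps    # eps guards against log(0) on silence
    right_energy = np.sum(right ** 2) + eps
    return float(10.0 * np.log10(right_energy / left_energy))

def flip_channels(stereo: np.ndarray) -> np.ndarray:
    """Swap the left (row 0) and right (row 1) channels."""
    return stereo[::-1].copy()

# Hypothetical example: a 1-second 440 Hz tone panned right
# (right channel has twice the amplitude of the left channel).
sr = 16_000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 440 * t)
stereo = np.stack([0.5 * tone, 1.0 * tone])

print(round(interaural_level_difference(stereo), 2))                 # → 6.02
print(round(interaural_level_difference(flip_channels(stereo)), 2))  # → -6.02
```

Real binaural perception also relies on interaural time differences and spectral cues, so a single energy ratio like this is only a rough proxy for direction.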

Because we humans can establish spatial correspondences between our visual and auditory senses, we immediately notice that the visual and audio streams are consistent in the first video and flipped in the second. Our ability to link the location of what we see with what we hear helps us interpret and navigate the world more effectively (e.g., a loud clatter draws our visual attention, telling us where to look; when interacting with a group of people, we use spatial cues to help disambiguate different speakers). In turn, understanding audio-visual spatial correspondence could enable machines to interact more seamlessly with the real world, improving performance on audio-visual tasks such as video understanding and robot navigation.

In our work, “Telling left from right: Learning spatial correspondence between sight and sound” (authors Karren Yang, Bryan Russell and Justin Salamon), we present a novel approach for teaching computers the spatial correspondence between sight and sound. The paper is being presented this week as an oral presentation at the Conference on Computer Vision and Pattern Recognition (CVPR) 2020, and as an invited talk at the CVPR 2020 Sight and Sound workshop.

To teach our system, we train it to tell whether the left and right channels of the spatial audio have been flipped. This surprisingly simple task yields a strong audiovisual representation that can be used in a variety of downstream applications. Our approach is “self-supervised,” meaning that it does not rely on manual annotations. Instead, the structure of the data itself provides supervision, so all we need for training is a large dataset of videos with spatial audio. Our approach goes beyond previous approaches in that it learns cues directly from stereo audio in order to match the perceived location of a sound with its position in the video.
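The flip-detection task described above can be sketched as a label-free data-generation step: for each clip, randomly swap the stereo channels and record whether a swap happened; a classifier that sees the video frames together with the (possibly flipped) audio is then trained to predict that bit. The NumPy sketch below is a hypothetical illustration, not our released implementation: it assumes audio arrives as a `(2, num_samples)` array, and the names `make_flip_example` and `binary_cross_entropy`, the 0.5 flip probability, and the placeholder arrays are all assumptions. The network and training loop are omitted.

```python
import numpy as np

def make_flip_example(frames, stereo, rng, flip_prob=0.5):
    """Create one self-supervised training example (hypothetical helper).

    frames: video frames for the clip, passed through unchanged.
    stereo: audio of shape (2, num_samples); row 0 = left, row 1 = right.
    Returns (frames, audio, label) with label = 1 if channels were swapped, else 0.
    """
    if stereo.ndim != 2 or stereo.shape[0] != 2:
        raise ValueError("expected stereo audio of shape (2, num_samples)")
    flipped = rng.random() < flip_prob
    # The label comes from the data transformation itself: no human annotation needed.
    audio = stereo[::-1].copy() if flipped else stereo.copy()
    return frames, audio, int(flipped)

def binary_cross_entropy(prob_flipped, label, eps=1e-7):
    """Loss on a classifier's predicted probability that the channels were flipped."""
    p = np.clip(prob_flipped, eps, 1.0 - eps)
    return float(-(label * np.log(p) + (1 - label) * np.log(1 - p)))

rng = np.random.default_rng(0)
frames = np.zeros((8, 64, 64, 3), dtype=np.float32)          # placeholder video clip
stereo = rng.standard_normal((2, 16_000)).astype(np.float32)  # placeholder audio

labels = [make_flip_example(frames, stereo, rng)[2] for _ in range(1000)]
print(np.mean(labels))  # fraction of flipped examples; should be near flip_prob
```

Flipping with probability 0.5 keeps the two classes balanced, so the classifier cannot do better than chance without actually relating the audio's left/right cues to what appears in the frames.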

As a second contribution of our work, we introduce the YouTube-ASMR-300K dataset, a large collection of thousands of ASMR videos with spatial audio curated from YouTube:

Let’s explore some of the applications enabled by our learned audiovisual representation.

First, we demonstrate localization of sounding objects in a video with our learned representation. In this example, using the input audio only, our system can automatically localize the speaker in the video:

As another example, we use our learned representation to automatically convert mono (single-channel) audio to stereo audio, a task known as “upmixing.” Please watch the following video with wired headphones to appreciate its spatial audio:

Finally, we also extend our approach to 360-degree videos with ambisonic spatial audio. Here, we use our learned representation to localize sounding objects from the input spatial audio alone:

We are excited about these results and believe that our learned representation could also prove useful for other downstream tasks, such as audiovisual search and retrieval and video editing for creatives.

For more details about our approach and to view the system demo, please see our project webpage and paper.

Contributors:

Justin Salamon, Bryan Russell, Adobe Research

Karren Yang, MIT

*The GIF above includes the following CC-BY licensed videos: