John Collomosse, Principal Research Scientist at Adobe Research, draws on computer vision, machine learning, and blockchain to create tools that build trust for digital content—helping verify if the images we see online are what they claim to be.

His work on content authenticity includes collaborations across Adobe, the academic world, and industry. It’s part of a larger effort that Adobe has pursued with its Content Authenticity Initiative, a group that has over 1,000 members including camera manufacturers, media outlets, and software companies. UK-based Collomosse, who is also a professor at the University of Surrey, sat down with us to talk about his work on Content Credentials, image fingerprinting, and more.

What is the Content Authenticity Initiative?

The Content Authenticity Initiative (CAI), led by Adobe, develops open standards and tools to inform users about the provenance of media. Provenance tells us about the origins of an image: where it came from and what was done to it along the creative supply chain, from the creation to the consumption of that asset.

There are a couple of important use cases for content authenticity. The first is fighting fake news and misinformation: we want to enable users to make better trust decisions about the assets they encounter online. The second is helping creators get better attribution for their work.

My team and I work with Andy Parsons, Senior Director of the CAI, and many Adobe colleagues on content authenticity. We enjoy collaborating with his team—they work not only on technical matters, but also on advancing education and driving engagement and adoption of the CAI.

How did you become interested in content authenticity research?

Before joining Adobe, I was involved in a project called ARCHANGEL, which we ran with the National Archives in a number of countries: not just the UK, but also the US, Norway, Estonia, and Australia. We were building technologies to help tamper-proof and prove the provenance of digital media within those National Archives, and we were using a combination of computer vision and blockchain technology to do that. This led to founding DECaDE, an academic research center exploring provenance topics.

When I came to Adobe Research, I was excited to speak with like-minded people at Adobe about the role of provenance in the Content Authenticity Initiative. Deepfakes were in the public spotlight for the first time, and the research trend was to address authenticity by detecting manipulated content. But not all manipulated media is misinformation. Most people use digital tools for good, and most misinformation is actually created by re-using original assets out of context. So rather than trying to detect manipulation, which doesn’t solve the problem, Adobe, with the work of the CAI, takes the approach of improving user awareness of the provenance of imagery.

At Adobe Research, several of us provided input to early technical advisory discussions, and we continue to give input to an open technical standard to permanently embed a secure provenance trail into assets. The CAI needed, and still needs, new technologies to be invented to make this vision possible. A good example of that is our content fingerprinting work at Adobe Research.

Could you tell us more about content fingerprinting?

The way the CAI works is by embedding a provenance trail in the metadata of assets, like images. This shows a history of who created the image, and which tools and assets they used. Ideally this information persists in the metadata from publication to consumption—enabling users to make more informed trust decisions about images they discover online.
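As a rough illustration of what a signed provenance record attached to an asset might look like, here is a minimal Python sketch. It is not the actual Content Credentials format: the field names, the `make_manifest` and `verify_manifest` helpers, and the shared-secret HMAC are all assumptions made for this example, standing in for the certificate-based signing a real standard would use.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret for the demo; a real system would use certificates.
SIGNING_KEY = b"demo-key"


def make_manifest(pixels: bytes, creator: str, tool: str, ingredients: list) -> dict:
    """Build a provenance claim bound to the pixel data, then sign it."""
    claim = {
        "creator": creator,
        "tool": tool,
        "ingredients": ingredients,  # assets that went into this one
        "content_sha256": hashlib.sha256(pixels).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(pixels: bytes, manifest: dict) -> bool:
    """Check that the claim is unmodified and still matches the pixel data."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    content_ok = manifest["claim"]["content_sha256"] == hashlib.sha256(pixels).hexdigest()
    return signature_ok and content_ok


pixels = b"raw image bytes"
asset = {
    "pixels": pixels,
    "metadata": {"provenance": make_manifest(pixels, "Alice", "PhotoEditor", ["sky.jpg"])},
}
print(verify_manifest(asset["pixels"], asset["metadata"]["provenance"]))
```

Because the record lives in the asset's metadata, anything that rewrites or discards that metadata also discards the record, even though the pixels themselves are untouched.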

One of the challenges is that the metadata can be stripped away from the asset, inadvertently or maliciously. So if you post an image on social media, for example, the metadata will usually be stripped off by the platform. Attackers may also strip off the metadata in an attempt to misattribute the content.

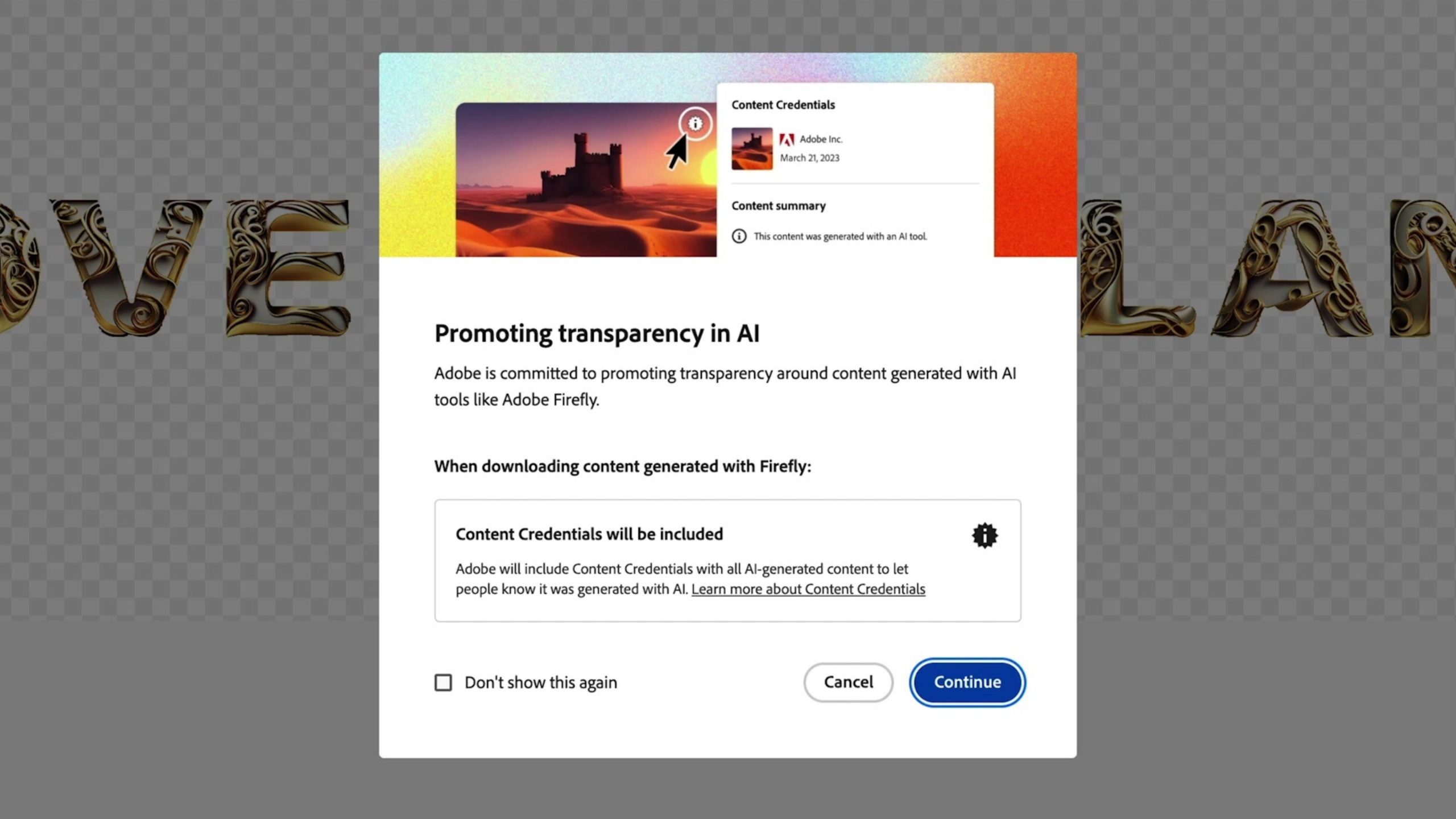

This is where content fingerprinting comes in. We shipped it at MAX 2022 in Photoshop Beta as part of the Content Credentials release. If an image has gone through our pipeline but has had its metadata stripped away, we can fingerprint that image based on its pixels and match it back to Adobe’s cloud, where an authoritative copy of the provenance information was stored at the time it was signed. Then we can display that provenance information. The Content Credentials ‘stick to’ the image, no matter which platforms or tool chains it passes through. It’s an opt-in process to use the system. You can try it out on the CAI Verify website.
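To make the idea of matching an image by its pixels concrete, here is a small Python sketch. It uses a classic average hash as a stand-in; the fingerprinting described in this interview is a learned model, so this is only an analogy. The `registry` dictionary is a hypothetical substitute for the cloud store of provenance records, and images are plain lists of grayscale values to keep the example self-contained.

```python
from typing import Dict, List, Optional

Image = List[List[int]]  # grayscale pixels, rows of 0-255 values


def average_hash(pixels: Image, size: int = 8) -> int:
    """Toy perceptual hash: block-average to size x size, threshold at the mean.

    Assumes the image is at least size x size pixels.
    """
    height, width = len(pixels), len(pixels[0])
    cells = []
    for by in range(size):
        y0, y1 = by * height // size, (by + 1) * height // size
        for bx in range(size):
            x0, x1 = bx * width // size, (bx + 1) * width // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def lookup(pixels: Image, registry: Dict[int, dict], max_distance: int = 6) -> Optional[dict]:
    """Return the stored provenance record for the closest fingerprint, if close enough."""
    query = average_hash(pixels)
    best = min(registry, key=lambda fp: hamming(fp, query), default=None)
    if best is None or hamming(best, query) > max_distance:
        return None
    return registry[best]


# An "original" image, a slightly brightened copy with no metadata, and an unrelated image.
original = [[x * 16 for x in range(16)] for _ in range(16)]
brightened = [[min(255, v + 5) for v in row] for row in original]
unrelated = [[y * 16 for _ in range(16)] for y in range(16)]

registry = {average_hash(original): {"creator": "Alice", "tool": "PhotoEditor"}}
print(lookup(brightened, registry))  # the copy still resolves to the stored record
print(lookup(unrelated, registry))   # no sufficiently close fingerprint
```

The point of the design is that the lookup key is derived from the pixels rather than from the file's metadata, so it survives re-encoding and metadata stripping as long as the visual content is largely preserved.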

This technology has also been adopted by the Adobe Stock moderation team, where it’s used to spot fraudulent activity. Also, Adobe recently launched Firefly, a generative AI model, and as part of our responsible approach to innovation, all Firefly art is tagged with Content Credentials. These can be recovered using fingerprinting even if the metadata is stripped away.

Could you tell us more about how your team developed this technology?

It has been an innovation arc for more than two years, starting with an Adobe Research summer internship sponsored by the CAI. I mentored that intern, Eric Nguyen, who developed a new object-centric fingerprinting method, which was published at ICCV 2021. Another intern moved this further in a CVPR 2022 paper, and several others explored new tools and datasets. Many other Research scientists and engineers worked on these core technologies and on scaling them to production.

Notably, Research Scientist Simon Jenni worked on image fingerprinting technology for the CAI, developing a model that verifies the retrieval results. This verification happens at the last stage of the pipeline, to suppress false positives. We are pursuing other modalities now too. Simon is pursuing research on fingerprinting technologies for video, and we have collaborated with Nick Bryan on audio.
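The retrieve-then-verify pattern described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the production pipeline: the verifier in the interview is a learned model, whereas here a simple cosine-similarity threshold stands in for it, and the `retrieve` and `verify` functions, the toy three-dimensional fingerprints, and the `0.95` threshold are all hypothetical.

```python
from math import sqrt
from typing import List, Optional, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two fingerprint vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query: Vector, index: List[Tuple[str, Vector]], k: int = 3) -> List[Tuple[str, Vector]]:
    """Stage 1: nearest-neighbour search. It always returns candidates, even for unknown images."""
    return sorted(index, key=lambda item: cosine(query, item[1]), reverse=True)[:k]


def verify(query: Vector, candidates: List[Tuple[str, Vector]], min_similarity: float = 0.95) -> Optional[str]:
    """Stage 2: accept a candidate only if it passes a stricter check; otherwise report no match."""
    for asset_id, fingerprint in candidates:
        if cosine(query, fingerprint) >= min_similarity:
            return asset_id
    return None


index = [("asset-a", [1.0, 0.0, 0.0]), ("asset-b", [0.0, 1.0, 0.0]), ("asset-c", [0.0, 0.0, 1.0])]

near_copy = [0.99, 0.05, 0.0]  # close to asset-a
unknown = [1.0, 1.0, 1.0]      # not in the index at all

print(verify(near_copy, retrieve(near_copy, index)))
print(verify(unknown, retrieve(unknown, index)))
```

Nearest-neighbour retrieval on its own would hand back its best guesses for the unknown image too; the final check is what turns "closest thing we have" into "actually the same content".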

What are you hoping to see next?

Another development for the CAI is that some new models of cameras are now including a feature to sign metadata into imagery when you take a photo. We saw that announced at Adobe MAX 2022 with Adobe’s Leica and Nikon partnerships, and it is such an exciting signal that CAI adoption is gathering pace.

With all this discussion of images, I want to point out that the story doesn’t stop there. We hope to bring Content Credentials to video and other applications. In the future of creativity, with new generative AI technologies, the issue of image provenance is even more timely. Researchers at Adobe are already developing new tools to define provenance for synthetic media, and working on other projects such as watermarking and summarizing the manipulations present in images using AI-generated text.
