Imagine scrolling through social media videos while on a crowded train, in a quiet office, or with a baby sleeping on your shoulder. Like many social media users, you likely have your sound turned off. This emerging silent-watching behavior is driving increased use of captions: overlaid text that transcribes what’s being said in the video. To reach these quiet audiences, digital marketers and content creators are turning to captions as a powerful tool for making online video content more accessible and engaging.

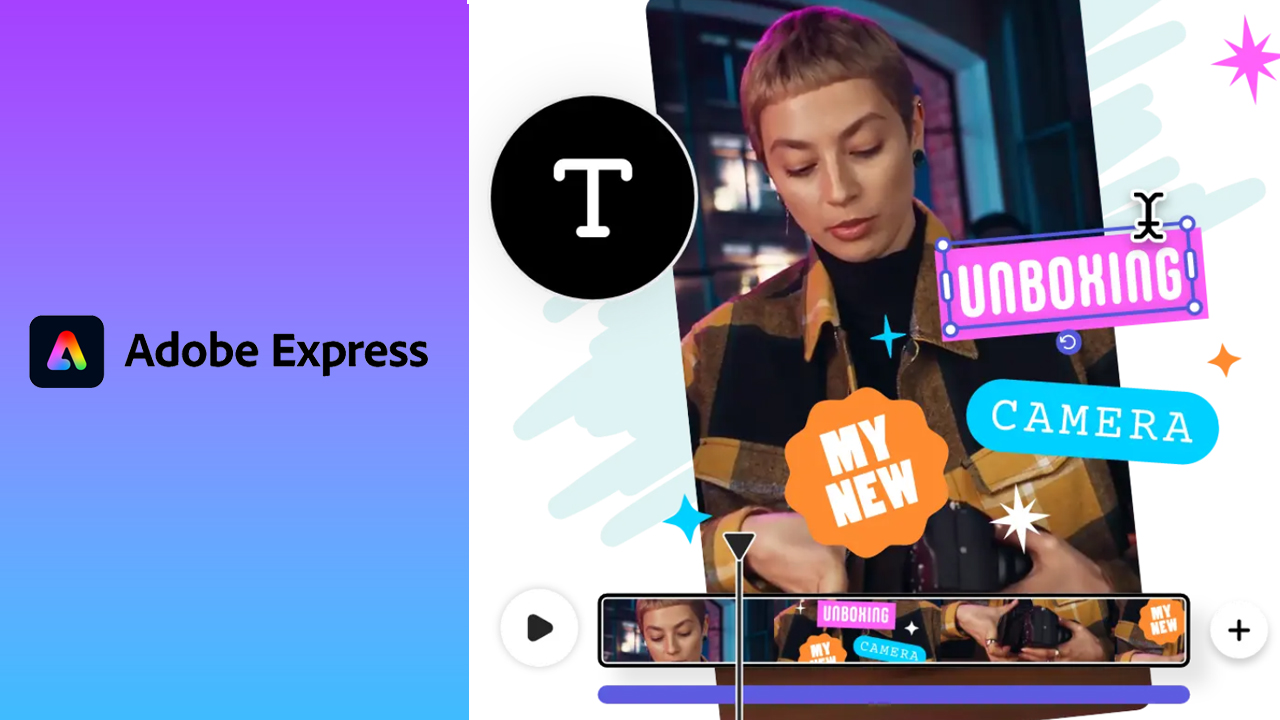

It is easy to see, then, why video captioning is currently the top request for Adobe Express on UserVoice, a forum for feature suggestions. It is also why Adobe Research has been partnering with the Adobe Digital Video and Audio team to deliver this capability to customers with the new Caption Video Quick Action for Express. Caption Video is an AI-powered captioning web tool that allows users to automatically add stylized, animated captions to their videos and either download the result or continue editing in Express.

To try this feature, check out the Quick Action and its Adobe HelpX page. It is currently free to use and supports multiple languages.

When a user uploads a video to the Quick Action interface, Caption Video does the captioning magic using a combination of AI and manually curated solutions. The customer experience is akin to having a skillful designer in the background completing a long list of tasks: transcribing the video’s audio into words, selecting the right font size and color for the text, and then meticulously placing the caption text at an optimal position on every frame of the video.

One particularly challenging problem that Caption Video solves for customers is placing captions in a face-aware manner. In early user testing, we discovered that many video creators do not like having captions placed on top of someone’s face in the video. Imagine a “selfie style” video where the speaker’s face can show up in any region of the frame. Captions at a fixed position are not ideal, because they can accidentally block the speaker’s face. At the same time, constantly moving the captions to avoid the face is not desirable either, because the layout looks jittery when faces move frequently. Caption Video employs an algorithmic solution that balances face avoidance and readability. It analyzes the video and dynamically adjusts caption placement via a combination of continuous optimization and discrete templates, saving users the hours of manual effort it would take to achieve the same result.
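To make the trade-off between face avoidance and stability concrete, here is a minimal Python sketch. It is not Caption Video's actual algorithm, which is not described in detail here. It illustrates only the discrete-template half of the idea, under several assumptions of ours: three hypothetical caption bands (`TEMPLATES`), face boxes supplied per caption segment in normalized coordinates, and a made-up `switch_penalty` that discourages jumping between bands. A small dynamic program then picks one band per segment so that total face overlap plus switching cost is minimized.

```python
from typing import List, Tuple

# (x0, y0, x1, y1) in normalized frame coordinates, origin at top-left.
Box = Tuple[float, float, float, float]

# Hypothetical caption templates: horizontal bands at the top, middle, bottom.
TEMPLATES: List[Box] = [
    (0.1, 0.05, 0.9, 0.20),  # 0: top
    (0.1, 0.42, 0.9, 0.57),  # 1: middle
    (0.1, 0.80, 0.9, 0.95),  # 2: bottom
]


def overlap_area(a: Box, b: Box) -> float:
    """Area of the intersection of two axis-aligned boxes (0 if disjoint)."""
    width = min(a[2], b[2]) - max(a[0], b[0])
    height = min(a[3], b[3]) - max(a[1], b[1])
    return max(width, 0.0) * max(height, 0.0)


def place_captions(
    faces_per_segment: List[List[Box]],
    switch_penalty: float = 0.05,  # assumed value; higher means steadier captions
) -> List[int]:
    """Return one template index per segment, chosen by dynamic programming."""
    if not faces_per_segment:
        return []
    k = len(TEMPLATES)

    def face_cost(segment: int, template: int) -> float:
        # Total area of faces hidden by this template during this segment.
        return sum(
            overlap_area(TEMPLATES[template], face)
            for face in faces_per_segment[segment]
        )

    # cost[t] = cheapest total cost of any placement sequence ending in t.
    cost = [face_cost(0, t) for t in range(k)]
    back_pointers: List[List[int]] = []

    for segment in range(1, len(faces_per_segment)):
        new_cost, pointers = [], []
        for t in range(k):
            # Cheapest predecessor, charging a penalty when the band changes.
            def transition(p: int) -> float:
                return cost[p] + (switch_penalty if p != t else 0.0)

            best_prev = min(range(k), key=transition)
            new_cost.append(transition(best_prev) + face_cost(segment, t))
            pointers.append(best_prev)
        cost = new_cost
        back_pointers.append(pointers)

    # Walk the back-pointers from the cheapest final state.
    t = min(range(k), key=lambda i: cost[i])
    path = [t]
    for pointers in reversed(back_pointers):
        t = pointers[t]
        path.append(t)
    path.reverse()
    return path
```

With a small `switch_penalty` the captions move whenever a face would be covered; with a large one they stay in a single band even if that band occasionally overlaps a face. A continuous refinement step, such as nudging the chosen band by a few pixels, could be layered on top, but that part is left out of this sketch.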

Looking ahead, we see many opportunities for further research on intelligent, content-aware text effects. For example, we’ve investigated automatic lyric video generation with collaborators from Stanford University and body-driven text with collaborators from George Mason University. These projects demonstrate how we can streamline the creation of expressive, animated onscreen text by analyzing the audio and visual content of source footage. We are excited to continue exploring this topic and invite you to try Caption Video and share your experiences with us.