Contributors: Rahul Arora, Rubaiat Habib, Danny Kaufman, Wilmot Li, Karan Singh

Hand gestures are a ubiquitous tool for human-to-human communication. They are physical expressions of mental concepts, and they augment our communication capabilities beyond speech. Mid-air gestures allow users to form hand shapes they would naturally use when interacting with real-world objects, thereby exploiting users’ knowledge and experience with physical devices. Recent advances in hand tracking technology (including hardware such as the Oculus Quest and Microsoft HoloLens) open up exciting new possibilities for interacting with computers in natural ways.

Adobe Research has a history of enabling natural interfaces to create dynamic media through acting and performances, including the design and development of the Emmy Award-winning Character Animator. In this spirit, our research team, including Rubaiat Habib, Wilmot Li, and Danny Kaufman (in collaboration with Rahul Arora and Karan Singh from the University of Toronto), is now exploring a key question: How will this new form of (gestural) interaction change the way we design and create dynamic content in immersive (AR/VR) environments?

VR Animation

Our work investigates the application of mid-air gestures to the emerging domain of VR animation, a compelling new medium whose immersive nature provides a natural setting for gesture-based authoring. Current VR animation tools are dominated by controller-based direct manipulation techniques that do not fully leverage the breadth of interaction capabilities and expressiveness afforded by hand gestures. How can gestural interactions specify and control various spatio-temporal properties of dynamic, physical phenomena in VR?

Gesture Elicitation Study

Despite decades of research on gesture-based interactions in HCI, we know very little about the role of mid-air gestures in complex design tasks like 3D animation, or about how such gestures map to those tasks. To gain a deeper understanding of user preferences for a spatiotemporal, bare-handed interaction system in VR, we first performed a gesture elicitation study. Specifically, we focused on creating and editing dynamic, physical phenomena (e.g., particle systems, deformations, coupling), where the mapping from gestures to animation is ambiguous and indirect.

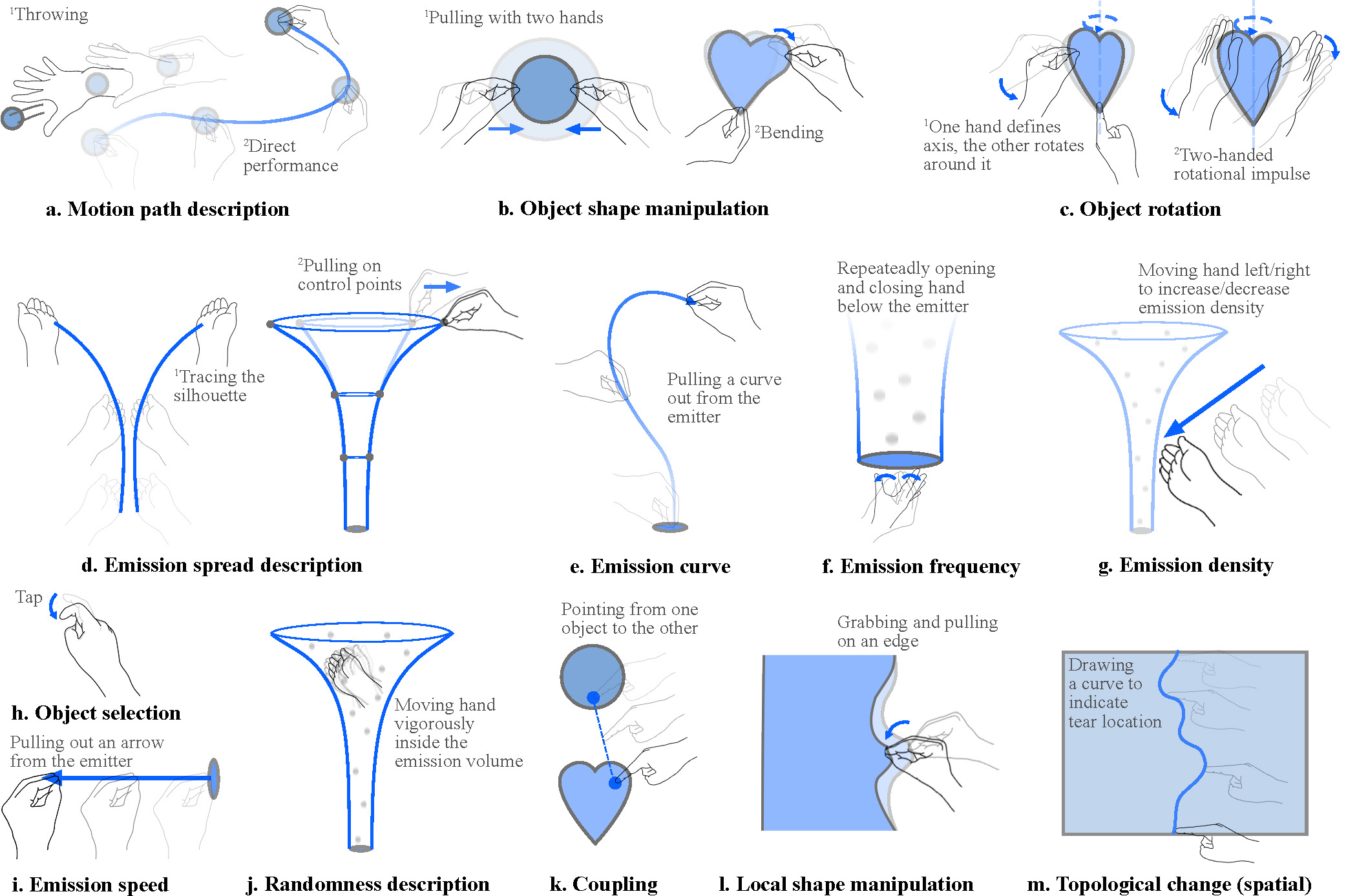

The resulting gestures from the study demonstrate the breadth and richness of mid-air interaction techniques to control dynamic phenomena, from direct manipulation to abstract demonstrations. For instance, users often employed a single gesture to specify a number of parameters simultaneously.

Most commonly observed gestures (dominant gestures for commands which had at least 10 occurrences in our study). These gestures include direct manipulation (a–c) and abstract demonstrations (d, f, g, i, j). Many involve interactions with physics (a, c), simultaneously specifying multiple parameters, such as speed and emission cone (d), and coordinating multiple parallel tasks using bi-manual interactions, where the non-dominant hand specifies the rotation axis, and the dominant hand records the motion (d).
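For readers who want to apply a similar filter to their own elicitation data, here is a minimal sketch of how a "dominant gesture" per command might be selected. It is an illustration, not the analysis code used in the study. The function name `dominant_gestures`, the command and gesture labels, and the sample observations are all hypothetical, and the sketch assumes the 10-occurrence threshold applies to the count of the most frequent gesture for each command.

```python
from collections import Counter, defaultdict


def dominant_gestures(observations, min_occurrences=10):
    """Return {command: (gesture, count)} for each command whose most
    frequent gesture was observed at least `min_occurrences` times.

    `observations` is an iterable of (command, gesture) pairs.
    Assumption: the threshold is applied to the dominant gesture's count.
    """
    counts = defaultdict(Counter)
    for command, gesture in observations:
        counts[command][gesture] += 1

    result = {}
    for command, gesture_counts in counts.items():
        # most_common(1) yields the single (gesture, count) with the highest count.
        gesture, count = gesture_counts.most_common(1)[0]
        if count >= min_occurrences:
            result[command] = (gesture, count)
    return result


# Made-up observations for illustration only (not data from the study).
observations = (
    [("emit particles", "throw")] * 12
    + [("emit particles", "point")] * 3
    + [("bend object", "two-hand bend")] * 6
    + [("bend object", "pinch and pull")] * 5
)

print(dominant_gestures(observations))
```

With the made-up data above, only the "emit particles" command passes the threshold, because its most frequent gesture appears 12 times while the most frequent gesture for "bend object" appears only 6 times.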

Design Guidelines

Based on our findings, we propose several design guidelines for future, gesture-based, VR animation authoring tools. These include suggestions for the role of physical simulation in VR, granularity of gesture recognition capabilities, and contextual interaction bandwidth. See the paper for more details.

A Gesture-Based Tool for 3D Animation

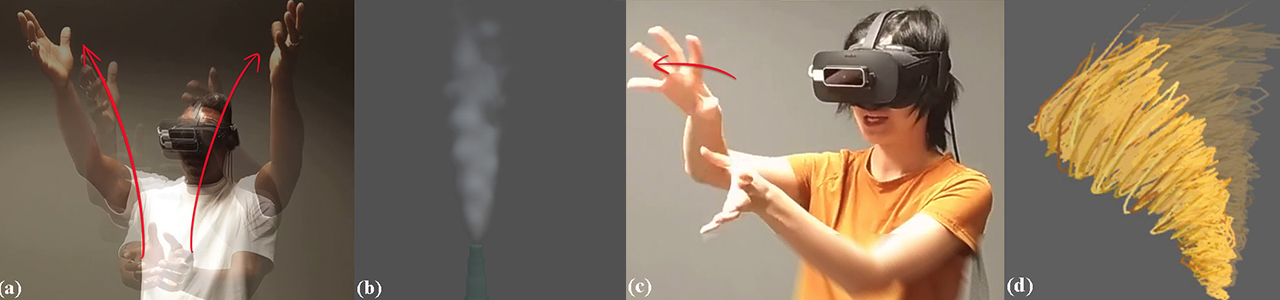

As a proof of concept, we implemented an experimental system that supports 11 of our most commonly observed interaction techniques. Our implementation leverages hand pose information to perform direct manipulation and abstract demonstrations for animating in VR. Watch the following video to see these interactions, as well as several compelling 3D animations created by novices using our system.
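To make the idea of one gesture setting several parameters more concrete, here is a small sketch of how tracked hand pose data could be turned into two particle emitter parameters at once: emission speed and emission cone angle. This is not the implementation of our system. The function `emitter_params`, its inputs, and the specific mapping (palm travel speed for emission speed, finger spread for cone half-angle) are assumptions chosen purely for illustration.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _norm(v):
    return math.sqrt(sum(x * x for x in v))


def _unit(v):
    n = _norm(v)
    return tuple(x / n for x in v)


def emitter_params(palm_track, fingertips, dt):
    """Hypothetical mapping from hand pose to emitter parameters.

    palm_track: list of (x, y, z) palm positions sampled every `dt` seconds
                (at least two samples).
    fingertips: list of (x, y, z) fingertip positions at the last sample.
    Returns (speed, cone_half_angle_degrees).

    Assumes the fingers do not point in exactly opposing directions,
    otherwise the mean direction is zero and cannot be normalized.
    """
    # Emission speed: average palm speed, i.e. path length over elapsed time.
    path = sum(_norm(_sub(b, a)) for a, b in zip(palm_track, palm_track[1:]))
    speed = path / (dt * (len(palm_track) - 1))

    # Cone axis: mean of the unit directions from the palm to each fingertip.
    palm = palm_track[-1]
    dirs = [_unit(_sub(tip, palm)) for tip in fingertips]
    axis = _unit(tuple(sum(c) / len(dirs) for c in zip(*dirs)))

    # Cone half-angle: largest angle between any finger direction and the axis.
    def angle_to_axis(d):
        dot = sum(a * b for a, b in zip(d, axis))
        return math.acos(max(-1.0, min(1.0, dot)))  # clamp for float safety

    half_angle = max(angle_to_axis(d) for d in dirs)
    return speed, math.degrees(half_angle)


# Illustrative input: the palm moves 0.2 m along z in 0.2 s,
# with two fingertips spread symmetrically around the z axis.
speed, cone = emitter_params(
    palm_track=[(0.0, 0.0, 0.0), (0.0, 0.0, 0.1), (0.0, 0.0, 0.2)],
    fingertips=[(0.1, 0.0, 0.3), (-0.1, 0.0, 0.3)],
    dt=0.1,
)
print(f"speed={speed:.2f} m/s, cone half-angle={cone:.1f} deg")
```

The point of the sketch is only that a single recorded hand motion carries enough information to drive multiple animation parameters together, which is the behavior participants exhibited in the study.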

Looking Forward

We put this work forward to start a conversation around the role of mid-air hand gestures in 3D design and animation. This work was presented at ACM UIST 2019. We hope our design guidelines inspire system builders to develop novel gesture-based design and animation tools, and that our experimental system can help in these future efforts. Designing novel user interfaces that combine mid-air gestures with traditional widgets is an exciting direction for future work that would further expand the scope of gestural interactions for content creation. Visit this external project page for more information.