If you want to create a 3D graphics asset—whether you’re envisioning a dress or a sports car or a weathered wooden bench—you’ll likely need to use a digital material to render the textures, relief, colors, sheen, and other visual properties of your object. But digital materials are notoriously difficult to acquire, create, and edit. That’s where Adobe Researchers come in. They’ve developed cutting-edge technologies, including Image-to-Material and Text-to-Material, that allow users to instantly transform a single photo or text prompt into a rich, versatile digital material.

“This is a really important capability for creative professionals, especially in areas such as the entertainment industry and product design,” explains Tamy Boubekeur, an Adobe Research Lab Director and Senior Principal Research Scientist whose team works on digital materials. “When you watch an animated movie or play a video game, for example, every pixel you see on screen is the response of a digital material to lighting conditions.”

Capturing digital materials from a single photo

In our day-to-day lives, we rarely think about how visually complex the materials we see really are. When you look at a red sports car, not every single point you see on the car is the same red, or even red at all. The reflection of light from the surrounding environment makes each point a different color. Digital materials need to model these nuances.

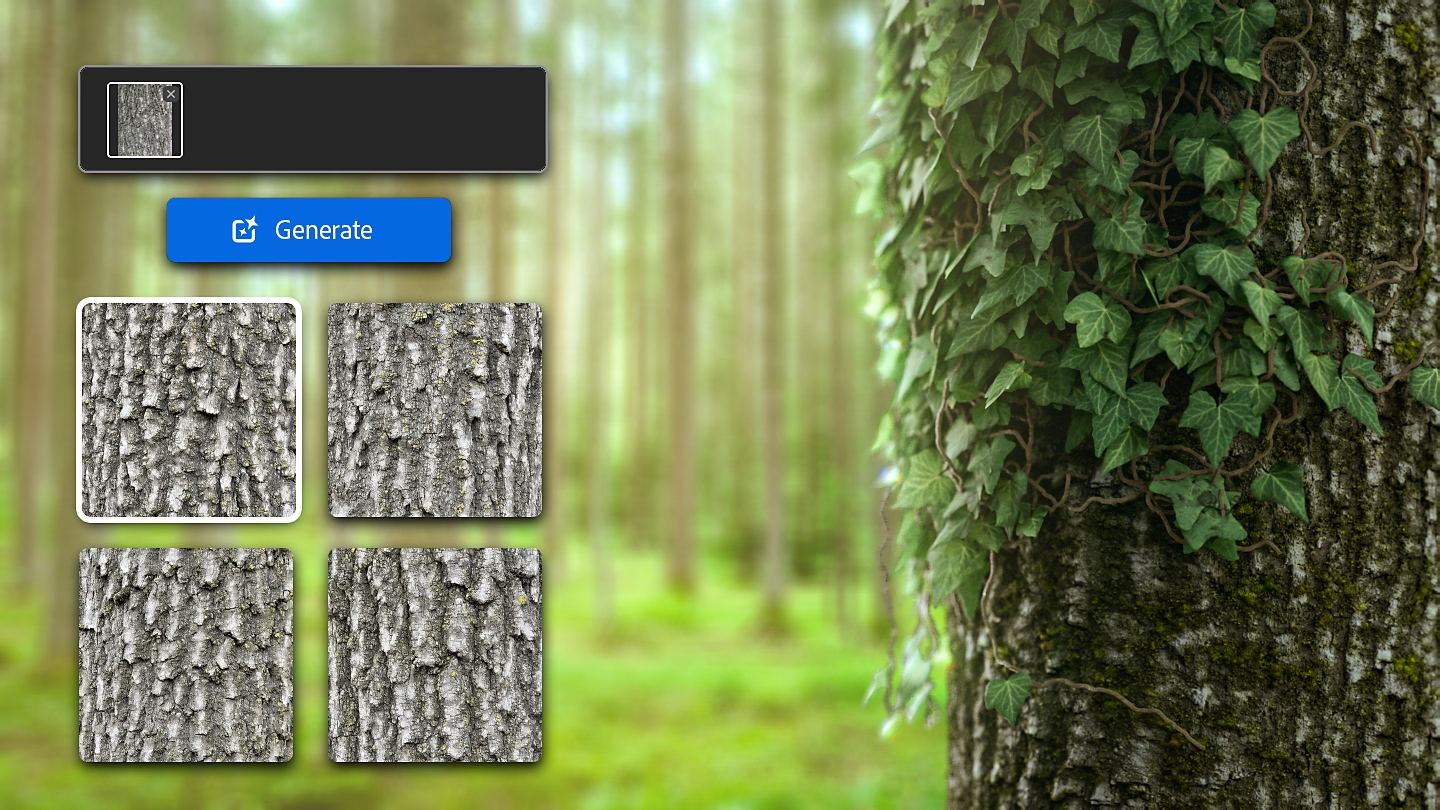

“To some extent, digital materials are view- and light-dependent colors, and creating them presents a lot of interesting challenges,” says Boubekeur. Over the years, researchers have developed machine learning techniques to take on the work of creating and applying digital materials. Most recently, Adobe Researchers developed the Image-to-Material feature for capturing a digital material from a user’s own photo.
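To see why a material behaves like a view- and light-dependent color rather than a single color, consider a textbook shading model. The Python sketch below uses the classic Blinn-Phong model, chosen only for illustration. It is an assumption, not the model Adobe’s tools use, and the helper names are hypothetical. The same red paint under the same white light comes out as a bright, whitish highlight at one point and a darker red at another, purely because the surface faces a different direction.

```python
import numpy as np

def normalize(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)

def shade_blinn_phong(base_color, normal, light_dir, view_dir, light_color,
                      specular=0.5, shininess=64.0):
    """Observed RGB at one surface point under a single directional light.

    Textbook Blinn-Phong shading, used only as an illustration (hypothetical
    helper, not Adobe's material model).
    """
    n, l, v = normalize(normal), normalize(light_dir), normalize(view_dir)
    h = normalize(l + v)  # half-vector between the light and view directions
    diffuse = np.asarray(base_color) * max(np.dot(n, l), 0.0)
    highlight = specular * max(np.dot(n, h), 0.0) ** shininess
    return np.asarray(light_color) * (diffuse + highlight)

red_paint = np.array([0.8, 0.05, 0.05])
white_light = np.array([1.0, 1.0, 1.0])
light_dir = normalize([0.0, 1.0, 1.0])
view_dir = normalize([0.0, 0.0, 1.0])

# Point A: surface tilted so the light reflects straight into the camera.
point_a = shade_blinn_phong(red_paint, light_dir + view_dir, light_dir,
                            view_dir, white_light)
# Point B: surface turned sideways, receiving only half of the light.
point_b = shade_blinn_phong(red_paint, [1.0, 0.0, 1.0], light_dir,
                            view_dir, white_light)

print("point A:", point_a.round(3))  # strong white highlight on top of the red
print("point B:", point_b.round(3))  # darker red, no visible highlight
```

Production material models (for example, physically based microfacet models) are far more elaborate, but the principle is the same: the color you see is the material’s response to light and viewpoint.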

The first challenge to building Image-to-Material was gathering enough data to train the machine learning models. To do it, the Adobe Research team tapped in-house artists. Boubekeur describes the process: “Instead of creating models of a single material, our artists developed procedural models that allow you to change parameters to create an infinite number of variations. With these models, we were able to generate millions of examples of materials over a large diversity of appearances and use cases—a gigantic data set that never existed in the real world, but that is very, very close to what we see in the world.”
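The idea of a procedural model can be sketched in a few lines. In the hypothetical Python example below, a toy “wood” color texture is controlled by a handful of parameters (ring frequency, two colors, and a distortion amount), and random draws of those parameters yield as many distinct variations as you like. Everything here is an illustrative assumption: real production procedural materials are much richer and typically output several maps (color, normals, roughness, and so on), not just a color texture.

```python
import numpy as np

def wood_albedo(size, ring_freq, light_color, dark_color, distortion, rng):
    """Hypothetical procedural wood texture: an RGB albedo map of shape
    (size, size, 3) made of wavy concentric rings."""
    y, x = np.mgrid[0:size, 0:size] / size  # pixel coordinates in [0, 1)
    # A random phase plus a small sine wobble keeps the rings from being perfect circles.
    phase = rng.uniform(0.0, 2.0 * np.pi)
    wobble = distortion * np.sin(6.0 * np.pi * y + phase)
    radius = np.hypot(x - 0.5 + wobble, y - 0.5)
    rings = 0.5 + 0.5 * np.sin(2.0 * np.pi * ring_freq * radius)  # in [0, 1]
    # Blend between the light and dark wood colors according to the ring pattern.
    return (1.0 - rings)[..., None] * light_color + rings[..., None] * dark_color

def sample_variations(count, size=64, seed=0):
    """Draw `count` random parameter sets and render one texture for each."""
    rng = np.random.default_rng(seed)
    for _ in range(count):
        params = {
            "ring_freq": rng.uniform(5.0, 40.0),
            "light_color": rng.uniform([0.5, 0.3, 0.1], [0.9, 0.6, 0.3]),
            "dark_color": rng.uniform([0.2, 0.1, 0.0], [0.4, 0.25, 0.1]),
            "distortion": rng.uniform(0.0, 0.05),
        }
        yield params, wood_albedo(size, rng=rng, **params)

dataset = list(sample_variations(count=5))
for params, texture in dataset:
    print(round(params["ring_freq"], 1), texture.shape)
```

Because the generator is seeded, the same seed reproduces the same variations, and raising `count` scales the data set without any extra artist work.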

In addition to building the data set, the team had to figure out how to handle the ambiguities that arise when attempting to estimate material from photographs—which is more complicated than it looks. “Is a red pixel the consequence of the light being red, or the material being red?” asks Boubekeur. “You also don’t know the geometry from a photo—is it a piece of very shiny material on a curved surface or a rough material on a flat surface?”

To address these questions, the team developed two kinds of neural models: one that de-lights a user’s image by removing the effects of light and shadow, and another that reconstructs the geometry of an object based solely on the resulting de-lit 2D image.
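The red-pixel ambiguity Boubekeur describes is easy to reproduce with the simplest possible image model, in which the camera sees the light color multiplied, channel by channel, by the surface color. In the Python sketch below (a deliberately simplified assumption that ignores geometry, shadows, and sheen; the function names are hypothetical), a red material under white light and a white material under red light produce exactly the same pixel. Dividing out the light recovers the material only if the light is known, which is exactly what a single photo does not reveal, and why the team turned to a learned de-lighting model.

```python
import numpy as np

def observed_pixel(light_rgb, albedo_rgb):
    """Toy image formation: the camera sees light color times surface color."""
    return np.asarray(light_rgb, dtype=float) * np.asarray(albedo_rgb, dtype=float)

def delight_with_known_light(pixel_rgb, light_rgb):
    """Invert the toy model, which is only possible if the light is known."""
    return np.asarray(pixel_rgb, dtype=float) / np.asarray(light_rgb, dtype=float)

red_material_white_light = observed_pixel([1.0, 1.0, 1.0], [0.8, 0.1, 0.1])
white_material_red_light = observed_pixel([0.8, 0.1, 0.1], [1.0, 1.0, 1.0])

# Both scenes produce the same pixel, so the photo alone cannot tell them apart.
print(np.allclose(red_material_white_light, white_material_red_light))  # True

# Assuming different lights recovers different materials from the same pixel.
pixel = red_material_white_light
print(delight_with_known_light(pixel, [1.0, 1.0, 1.0]))  # [0.8 0.1 0.1]
print(delight_with_known_light(pixel, [0.8, 0.1, 0.1]))  # [1. 1. 1.]
```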

These two models, coupled with other algorithms, power the Image-to-Material feature, allowing users to do something they never could before: take a single smartphone photo and, in one step, instantly convert it into a high-resolution digital material.

Creating digital materials with just a few words

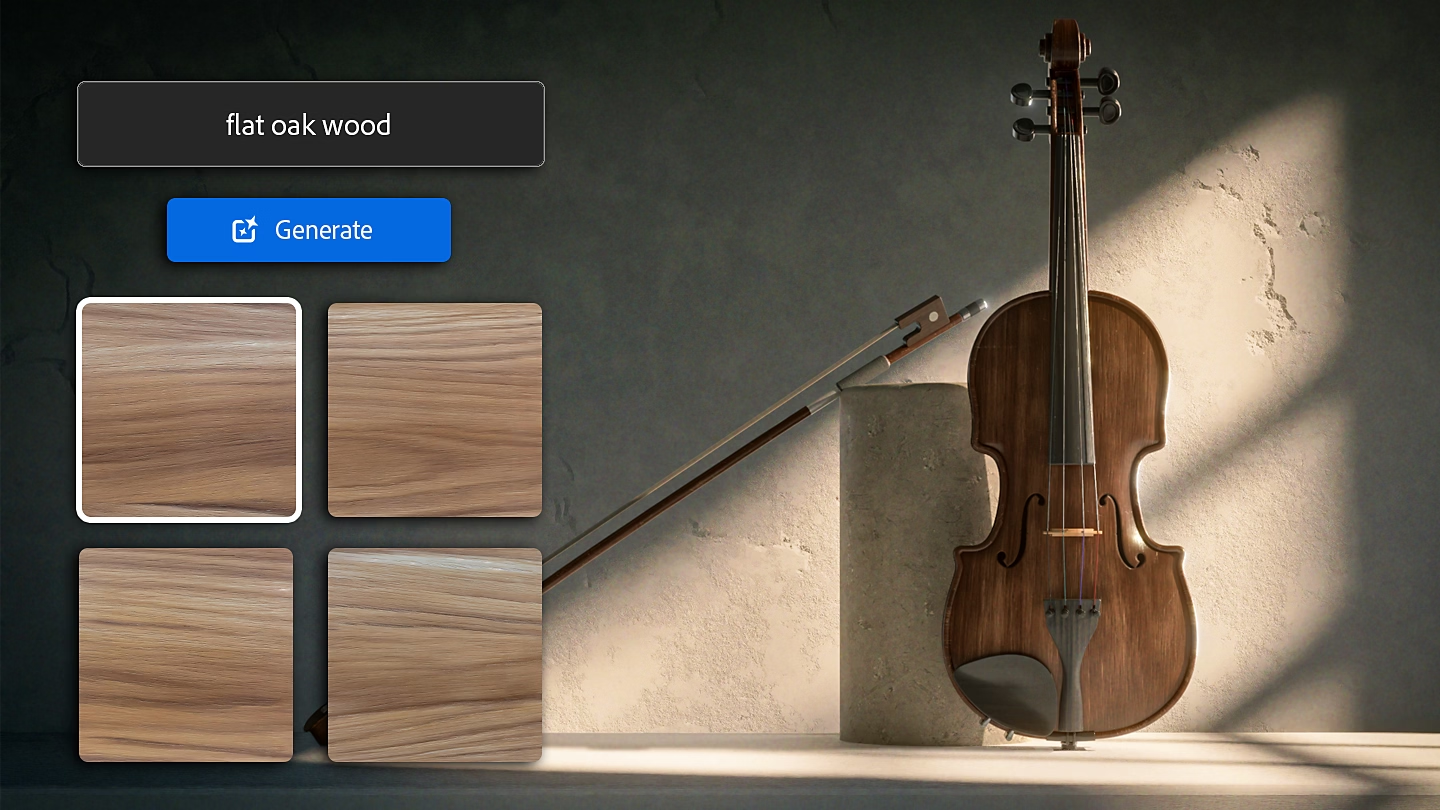

While Image-to-Material allows users to capture a material from the real world with a photo, Text-to-Material focuses on generating imagery. For users, it works much like Adobe Firefly: they enter a text prompt describing the material they need.

The first hurdle to creating the Text-to-Material feature was gathering a large enough data set of high-quality text descriptions of materials to train the models. After all, professionals aren’t simply looking for red plastic—they want to specify the sheen, give a detailed color description, and tweak the other properties, too.

So, the Adobe Research team tapped into the powerful text-to-image model from Firefly and fine-tuned it to generate textures rather than natural images. From there, they used the technology behind a recent major update of the Image-to-Material feature to turn a generated texture into a digital material. The result, Text-to-Material, lets users create the material they need instantly, simply by describing it.

Making digital materials work for users

Once a user has the perfect digital material, they also need it to be robust enough to translate into any context. “Let’s say you’re working on an image of a shiny new car,” says Boubekeur. “How do you accurately reproduce the actual appearance of the paint job in a given environment? This is called image synthesis, or rendering, and we’ve developed a lot of rendering algorithms that handle this challenging task, along with tools to edit materials so they map to the style of your creation.”

Digital materials also need to work from a limited sample. For example, if a user photographs a fabric, they’ll likely need more of it than the photo captures to cover an object. “Let’s say you want to use a photo of a fabric to create a dress; it’s going to be a much bigger piece of fabric than your photo contains. So, we’ve developed technology that allows you to reconstruct tile-able materials seamlessly. You can tile ten times without visible seams, all while addressing the complex geometry problems that come with applying a 2D material to a 3D shape,” says Boubekeur. With all these capabilities, users of digital materials can control the entire process, from creating a material to editing it and applying it in three dimensions, with accuracy and in real time.
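As a much cruder stand-in for that tiling technology, the Python sketch below shows what “tile-able” means using a classic trick: cross-fade the texture with a copy shifted by half its width, giving the shifted copy full weight at the borders, so that the left and right edges (and then the top and bottom) match when the texture is repeated. This is an illustrative assumption, not Adobe’s method, and the function names are hypothetical.

```python
import numpy as np

def make_tileable_1d(img, axis):
    """Cross-fade `img` with a copy rolled by half its size along `axis`,
    so that opposite edges along that axis line up when tiled."""
    n = img.shape[axis]
    j = np.arange(n)
    # Triangular weight: 0 at both borders, close to 1 in the middle.
    w = 1.0 - np.abs(2.0 * j / (n - 1) - 1.0)
    shape = [1] * img.ndim
    shape[axis] = n
    w = w.reshape(shape)  # broadcast the weight along the chosen axis
    # The rolled copy wraps around smoothly at the borders; its own seam
    # lands in the middle, where the original image dominates.
    rolled = np.roll(img, n // 2, axis=axis)
    return w * img + (1.0 - w) * rolled

def make_tileable(img):
    # Two separable passes: left/right edges first, then top/bottom edges.
    return make_tileable_1d(make_tileable_1d(img, axis=1), axis=0)

def wrap_seam(img, axis):
    """Largest jump between the last and first row/column when tiled."""
    return np.max(np.abs(np.take(img, -1, axis=axis) - np.take(img, 0, axis=axis)))

n = 64
ramp = np.tile(np.arange(n, dtype=float), (n, 1))  # brightens left to right
print(wrap_seam(ramp, axis=1))                 # 63.0: hard seam when tiled
print(wrap_seam(make_tileable(ramp), axis=1))  # about 1.0 instead of 63.0
```

The same functions accept an RGB array of shape (height, width, 3). On real photos this simple blend tends to look soft or doubled, and it ignores the 2D-to-3D mapping problems Boubekeur mentions; a production tiling method has to handle both.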

Turning ambitious research into products for Adobe’s users

To get the technology into the hands of users, Adobe Researchers collaborated with Adobe product teams in multiple ways, from co-designing features with them to working hand-in-hand with product engineers. For tasks that require more resources than a user can access on a laptop, the team developed web services that Adobe products can query, a strategy that helps users move more quickly while also allowing Adobe to continually improve the tools between product releases.

Adobe users can access the Image-to-Material and Text-to-Material features through several products, including Adobe Substance 3D Sampler, and use the resulting materials in applications like Substance 3D Painter, Photoshop, and Illustrator.

Adobe’s digital materials technology is also integrated into major game engines, rendering engines for movies and digital animation, CAD tools, and other design applications. The Adobe Research team also recently collaborated with HP to co-design HP Z Captis, a dedicated device that scans a material’s properties, including its base color and the way it reflects light, to capture extremely high-quality digital materials. The device relies on a new software processing chain developed by Adobe Research and the Adobe Substance 3D Sampler engineering team.

Boubekeur is excited to see what’s next as users apply materials to more complicated geometry, imagine new materials, and use their creations to design new kinds of experiences for their audiences. With these creative capabilities, Boubekeur adds, “Material has become the new color.”
