Paul Guerrero, a Research Scientist at Adobe Research, thinks about the way a human looks at a scene to create new ways of editing images. We talked to him about shape analysis, the new editing tools that will change how we interact with AI-generated images, and some of his favorite things at Adobe Research.

Your work focuses on shape analysis for irregular structures using machine learning, optimization, and computational geometry. How did you get interested in this area, and how does it influence your work?

At the beginning of my academic career, I was looking for new challenges that weren’t well explored yet. Images are often defined as regular grids, and that area has already been explored quite well. The problem is that, with grids, the main way of editing is to do things like change the lighting values or the individual values in the grid.

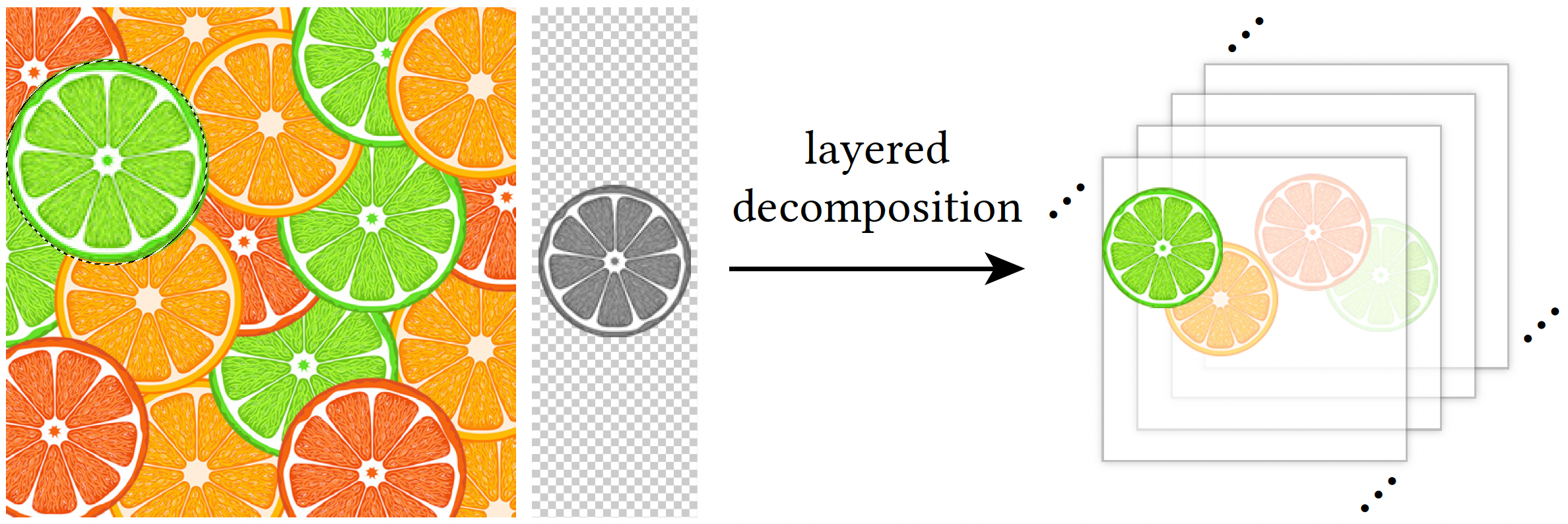

But when you look at a scene, you probably don’t think of individual lighting values or a grid of values. You think of a set of objects. So I became interested in studying irregular structures, such as graphs, meshes, or vector graphics. When we work with these types of structures, we can use them to define a scene as a set of objects, instead of a grid of values. For example, when you define a scene as a set of objects, you can take an object and move it around, or change properties of that object. You don’t need to find out which grid constitutes the object. It’s much easier to work with this kind of representation because it better aligns with your own way of thinking about the scene. An example is how we work with imagery of furniture in a room as we build a scene of an interior space. Understanding the structure of the furniture can make the image easier to control and interact with in an intuitive way.

Can you tell us a little about your current research?

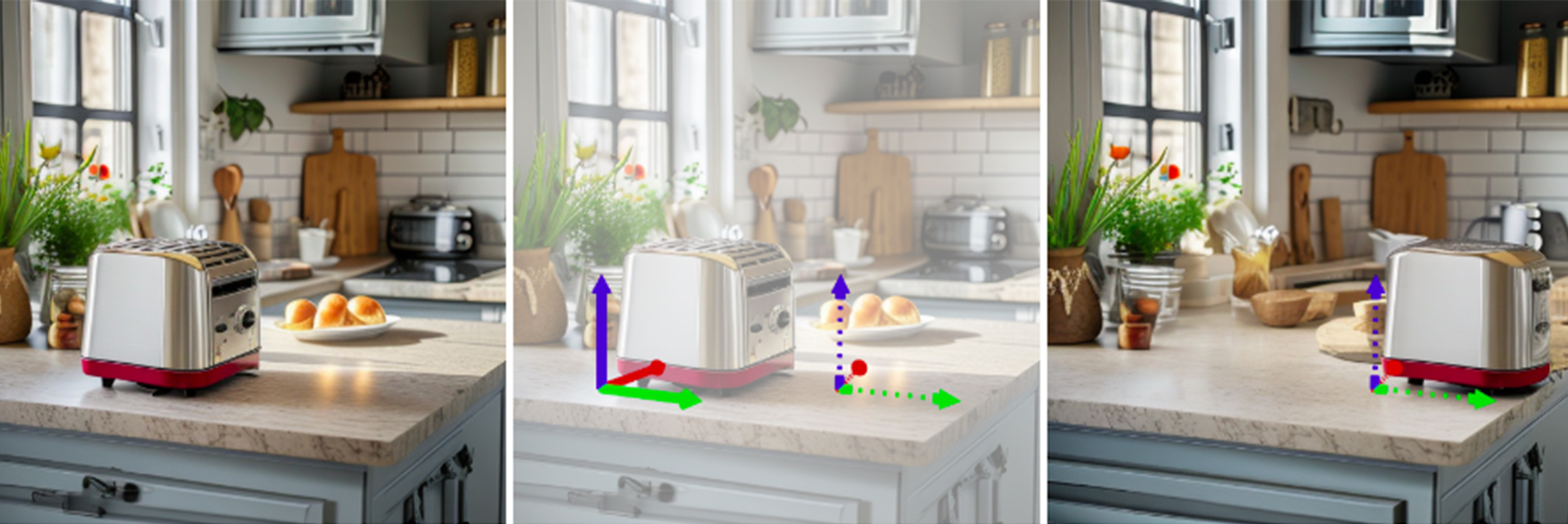

Imagine you have an image of a toaster on a table that you’ve generated with AI. You like it, but you want to move the toaster. You could write a different text prompt that says the toaster should be on the right, but that would also give you a completely different scene. So how can we allow you to move something, but still preserve the identity of the object? The background would remain the same, the toaster would remain the same, but you could make your edits. This is what I’ve been working on.

To allow people to edit the details of a scene, we’re developing technology that lets a user to just click the toaster and put it somewhere else. We need the technology to change the perspective of the toaster, so people can do things like putting the toaster behind another object. Another main challenge is to keep the lighting realistic. For example, if you move the toaster underneath a lamp, obviously its lighting and shadows need to change. This is all part of research we’ve published recently.

I’m also working on what we call procedural models. A procedural model is like a small program that lets you input parameters in order to output visual content. Imagine you’re working on an image with wood. The procedural model can take in parameters like the grain and age of the wood, or what type of wood it is, and present these parameters as sliders or dropdowns that let you change the wood to suit your image. These models have been around for a long time, but one challenge is that it’s quite hard to generate them for a given image. So we have been working on automatically creating editing parameters based on an image a user provides. So, say a user provides a photo with a wood material, we want to automatically create the program that provides sliders for the different wood parameters. We have some initial methods that can do this for many cases.

What are you most excited about in your research field at the moment? What kinds of problems interest you the most?

I’m interested in what’s next for generative AI. Text-to-image models and text-to-3D models are quite exciting because they can create very realistic images, and they make content creation more accessible to a wider audience. Anyone can just type in text and create a 3D scene or image.

But there is still a lot of work to be done to allow users to edit the details once they’ve created something with generative AI. It’s quite exciting to think about how we can provide ways to control the content.

What will these controls look like? Could users describe what they want to control, and the technology would automatically generate the corresponding controls for them? These are interesting questions.

How do you think about Adobe’s customers and their pain points? How does that impact your research?

Our product teams are close to customers, so we hear from them about customer needs for specific products, which is very helpful. I also think that one of the exciting roles of Adobe Research is to think beyond the current modalities and toward completely different editing paradigms for the future. Many of the new paradigms will circumvent existing pain points altogether.

What do you love most about working for Adobe Research?

My favorite thing is being able to explore new research directions. Another advantage is getting an inside look at new products as they are being developed. It’s interesting and inspirational to see the directions people are exploring. And I love getting feedback on the research I’m doing from product teams—and the people who eventually use the products.

Wondering what else is happening inside Adobe Research? Check out our latest news here.