Even as an undergraduate, Srikrishna Karanam, a Research Scientist at Adobe Research in Bangalore, India, was thinking about how to use machine learning to solve real-world problems. Now he’s using generative AI to make it easy for solo entrepreneurs and small businesses to give their visual materials an on-brand look and feel.

We talked to Karanam about his early research, the new On-brand Recolor feature in Adobe Express, and the future of on-brand generation.

Your research interests include video analytics, computer vision, and machine learning. How did you first get interested in these areas?

Looking back, I think it was my undergraduate course in signal processing and probability that really got me fascinated with machine learning and computer vision. As we learned about the foundations of modern machine learning, I kept looking for ways to apply the concepts to real problems. That’s something that has always interested me—getting things to work in the real world.

I decided to go to grad school at Rensselaer Polytechnic Institute (RPI), which was also my first time coming to the US from India. My research was about building algorithms and systems for automated video analytics, and I had the opportunity to deploy and test them in the field. After I completed my MS and PhD, I was eager to come back home, and Adobe Research was at the top of my list. I knew it was an environment where researchers can do research, publish, and build real systems.

At Adobe Research, you’re working on a really interesting machine learning application in the real world: generative tools that make it easier to create on-brand marketing materials. How do these tools help Adobe users?

We find that customers who aren’t professional designers want to bring assets, like photos and design layouts, from external sources into Express, and they want those assets to be on-brand. But making external assets match a brand is tricky and requires lots of manual fine-tuning. For example, adjusting the colors in an image to fit a brand palette takes a lot of effort. So we wanted to make this process easier.

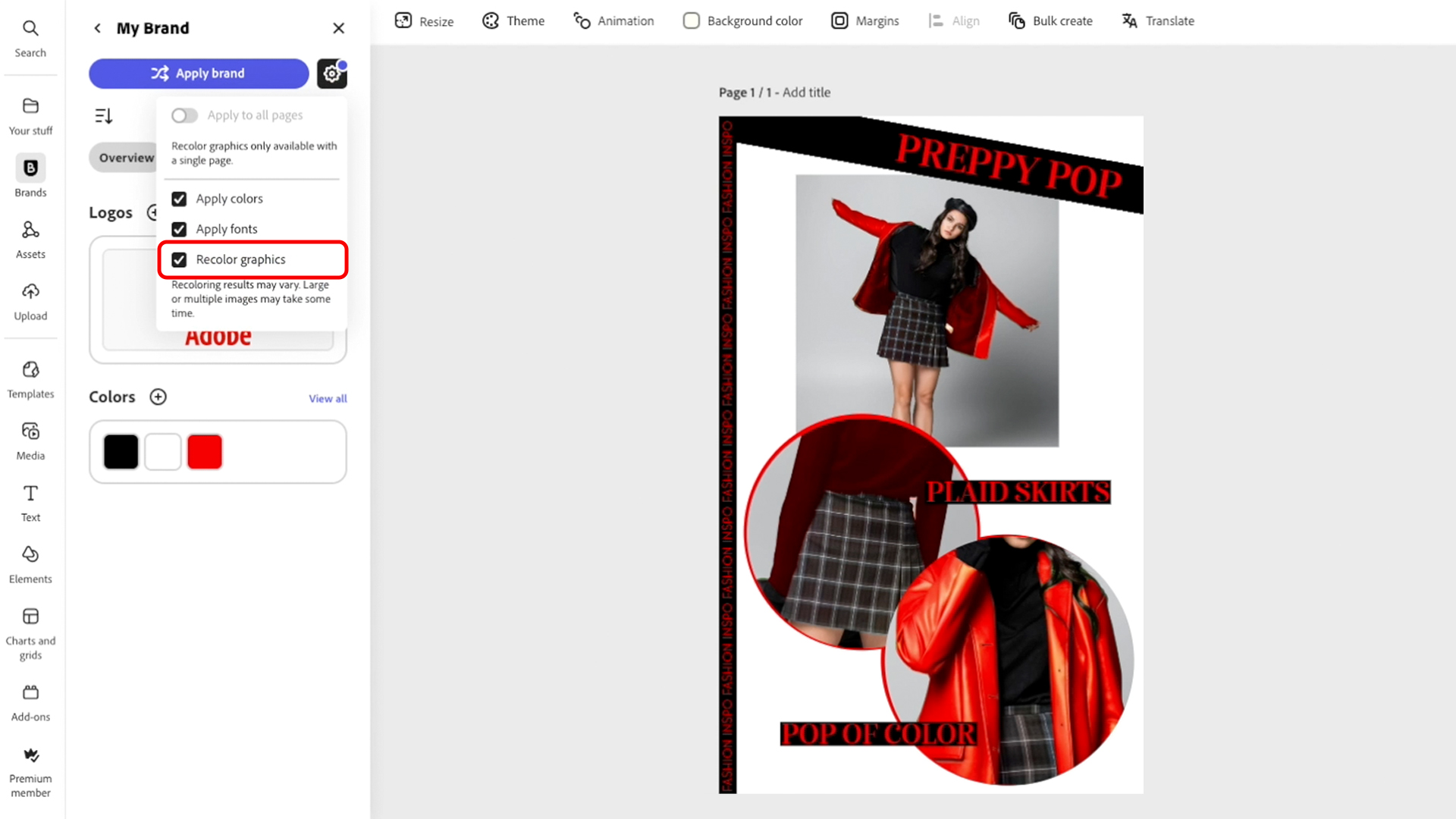

We recently collaborated with the Express product team to create a new feature, On-brand Recolor, which was released at Adobe MAX 2024. The feature uses AI to selectively update graphics with a brand’s color palette.

On-brand Recolor is designed for people who aren’t creative professionals. Can you tell us how you create tools with this group of users in mind?

We work closely with our colleagues on the product teams since they’re experts in what their users need. With Express, users are often solo entrepreneurs or small business owners, and they’re not creative professionals. They want to quickly create materials that reflect their brands, so they’re looking for one-click experiences.

On top of being quick and easy to use, our models also need to be very accurate. For example, in the case of On-brand Recolor we need to be very precise and smart about which areas to recolor. Users want a good result on their first click: they don’t have a lot of time to spend editing images, and they may not have experience with more complex editing tools.

Can you tell us about the research and technology behind the new on-brand generation tools?

Recent advances in machine learning, in particular generative modeling for images and vision-language understanding, have made our on-brand generation work possible.

With On-brand Recolor, for example, a key challenge we faced was determining which regions should be recolored in an image. We knew that users wouldn’t want to change all of the colors in an image, especially the natural elements. And we knew that asking users to manually specify which regions to recolor would be a cumbersome workflow. So we tackled this challenge by using AI to label the regions we knew shouldn’t be recolored, such as skin, water, and grass. Our next innovation was training a generative model conditioned on color palettes from a user’s brand kit and then applying it to the remaining parts of the image.

When I think back to a decade ago, when the deep-learning era was just taking off, it’s amazing that we are able to do all of this now. I couldn’t have imagined it.

What do you think is next for on-brand generation?

We have some immediate next steps. Right now, the On-brand Recolor feature is available for single-page templates or a single page the user selects, and we’re working on extending the model to multiple pages. Doing that requires reducing the time it takes to process the photos and illustrations across all of those pages.

Further into the future, we want to build technology that meets the full spectrum of branding needs, from creating a brand to applying it in many different ways. For example, On-brand Recolor uses colors from a user’s brand kit and applies them to graphics. But there are many other attributes, such as font and tone of voice, that constitute a brand’s identity. With On-brand Recolor, we’re helping to pave the way for features that can automatically update any of those attributes with just a click.
