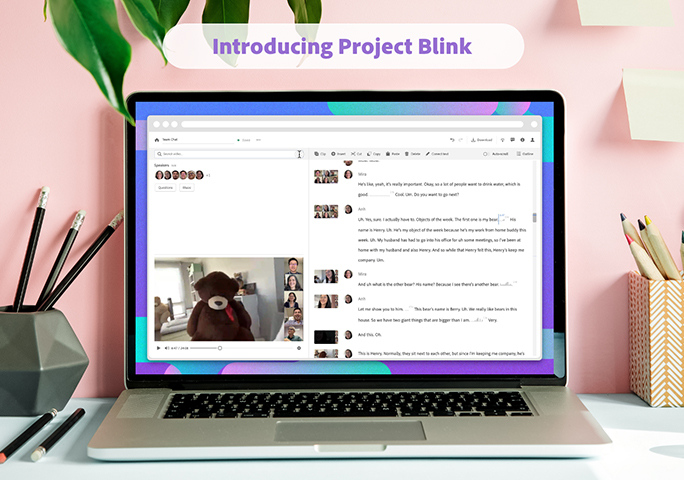

It started with an idea: that video editing would be easier and better if users could simply search for words, images, people, and moments in a video, then cut and paste just as they do with a text document. To turn this vision into a reality, a cross-disciplinary team led by Adobe Research came together to build the new AI-powered, web-based video editing app, Project Blink.

“Project Blink is an experimental way of doing video editing. We’re allowing people to edit by content rather than going frame-by-frame,” explains Research Scientist Joy Kim. “We’ve removed the barrier of having to watch a video over and over to find the parts you want to edit. And we’ve created a new way to find the story inside a video.” The public got its first glimpse of Project Blink during a Sneak at the most recent Adobe MAX. The app is now available in beta.

How Project Blink Works

To make this new kind of editing possible, Project Blink transforms the content of a video into a text-based, searchable transcript that includes who’s speaking and what they’re saying. Users can also search the video for objects (a bear or a car, for example), sounds (such as laughter), emotions, speakers, and more, to find when they appear. Then they can edit the video by cutting, pasting, and deleting moments within the transcript, just as they do in a text document.
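To make the idea of editing by transcript concrete, here is a minimal sketch in Python. It is not Project Blink's implementation, which this article does not describe. The `Word` type, the function names, and the `max_gap` threshold are all hypothetical. The sketch assumes each transcript word carries a speaker label and start/end timestamps, so that deleting words from the text can be mapped back to the time ranges of video to keep.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Word:
    """One transcript word with a speaker label and source timestamps (hypothetical type)."""
    text: str
    speaker: str
    start: float  # seconds into the source video
    end: float


def search(transcript, query):
    """Return the indices of words matching the query, ignoring case and punctuation."""
    q = query.lower()
    return [i for i, w in enumerate(transcript)
            if w.text.lower().strip(".,!?") == q]


def delete_words(transcript, first, last):
    """Return a new transcript without the words at indices first..last (inclusive)."""
    return transcript[:first] + transcript[last + 1:]


def to_segments(transcript, max_gap=0.05):
    """Merge the remaining words into (start, end) ranges of source video to keep.

    Consecutive words are merged when the next one starts within max_gap
    seconds of the previous one ending; otherwise a new segment (a cut) begins.
    """
    segments = []
    for w in transcript:
        if segments and 0 <= w.start - segments[-1][1] <= max_gap:
            segments[-1] = (segments[-1][0], w.end)
        else:
            segments.append((w.start, w.end))
    return segments


# Illustrative data only; the timestamps are made up.
transcript = [
    Word("We", "Speaker 1", 0.0, 0.2),
    Word("saw", "Speaker 1", 0.2, 0.5),
    Word("a", "Speaker 1", 0.5, 0.6),
    Word("bear", "Speaker 1", 0.6, 1.0),
    Word("near", "Speaker 1", 1.0, 1.3),
    Word("the", "Speaker 1", 1.3, 1.4),
    Word("car", "Speaker 1", 1.4, 1.8),
]

hits = search(transcript, "bear")        # find where "bear" is spoken
edited = delete_words(transcript, 2, 3)  # delete "a bear" from the text
segments = to_segments(edited)           # time ranges of video that remain
```

In this toy example, deleting two words from the text yields two video segments with a cut between them. A real system would also need to handle pasting and reordering, which this sketch leaves out.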

Project Blink automatically trims clips for smooth transitions. It can even remove unnecessary “ums,” “ahs,” and pauses.
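As a rough illustration of removing filler words and pauses, the sketch below uses a simple rule-based approach. This is an assumption for illustration only: the article does not say how Project Blink detects fillers. The `FILLERS` set, the `keep_ranges` function, and the `max_pause` threshold are hypothetical.

```python
# Hypothetical filler list; a real system would likely use a learned detector.
FILLERS = {"um", "uh", "ah"}


def keep_ranges(words, max_pause=0.5):
    """Return (start, end) time ranges to keep after dropping fillers and long pauses.

    words is a list of (text, start, end) tuples in playback order.
    Two words are merged into one range only when no filler was removed
    between them and the silence separating them is at most max_pause seconds.
    """
    ranges = []
    prev_kept_index = None
    for i, (text, start, end) in enumerate(words):
        if text.lower().strip(".,!?") in FILLERS:
            continue  # drop the filler word entirely
        adjacent = prev_kept_index == i - 1
        if ranges and adjacent and start - ranges[-1][1] <= max_pause:
            ranges[-1] = (ranges[-1][0], end)  # extend the current range
        else:
            ranges.append((start, end))        # start a new range (a cut)
        prev_kept_index = i
    return ranges


# Illustrative data only; the timestamps are made up.
words = [
    ("So", 0.0, 0.3),
    ("um", 0.4, 0.7),
    ("we", 0.8, 1.0),
    ("built", 1.0, 1.4),
    ("it", 3.0, 3.2),  # preceded by a 1.6-second pause
]
ranges = keep_ranges(words)
```

Here the "um" and the long pause before "it" each produce a cut, leaving three ranges of speech to join together.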

Project Blink was designed with several uses in mind, including helping people who aren’t creative professionals to create videos, making it easier to review and analyze videos, and giving video editors a quick, simple way to develop a first cut before refining a video in Premiere. The tool could also be valuable for livestreamers who edit longer videos down for sharing, and anyone who records a meeting and needs to either search back through what was said, or compile the highlights.

The Origins of Project Blink

Over the years, Adobe has received requests for transcript-based editing tools for both video and audio—tools that would make editors’ lives much easier.

At the same time, Adobe researchers, often in collaboration with academia, have long been working on how AI might transform video creation. This research included deep dives into finding moments in video with natural language, detecting filler words, enhancing speech, recommending music for videos, and adding b-roll. One long-standing collaboration has been with Stanford professor Maneesh Agrawala, who inspired early explorations in text-based editing of audio and video. Together, Adobe researchers, Prof. Agrawala, and his students have developed text-based editing systems for audio stories, interview footage, narrated videos, and dialogue-driven scenes.

So, with research and user requests all pointing the way, Adobe researchers assembled a dream team of experts to build Project Blink. The group included researchers and product engineers; specialists in machine learning, artificial intelligence, and human-computer interaction; and designers and user researchers. (Check out our blog post on “boomerangs” who returned to Adobe Research to focus on Project Blink.)

“We were one of the first Adobe Research-based teams to take on a project this big, and to assemble such a diverse team,” says Research Scientist Xue Bai. “It was such a thrill to collaborate on this project and release it to Adobe users.”

Project Blink “in the Wild”

When Project Blink was revealed at Adobe MAX this past fall, it was still in beta. So the team opened up access slowly, adding new users every week to get feedback. So far, beta users have raved about how Project Blink helps them save time and analyze videos in new ways.

As a UX researcher using Project Blink explained, “I’ve been testing out using Project Blink for analyzing user interviews. I’ve found it extremely useful to be able to pull relevant video clips out of long videos for the purpose of building research presentations.”

Another beta user added, “The best thing about Project Blink for me is that I can scroll through the text in order to see what is being said and where; to be able to look through the video visually instead of having to listen to the whole thing is such a time saver — I used to listen through my entire video at high speeds for editing which was tedious; doing this also made it easy to miss things if I wasn’t fully paying attention.”

Thousands of users have signed up to use Project Blink. Now, the team is listening closely to feedback so they can continue to refine the technology.

“At first, we wanted to see how Project Blink would do out in the wild. Our early goal was to find at least 50 people who’d be sad if Blink was no longer available,” remembers Research Scientist Valentina Shin. “We’ve already exceeded our early hopes and we’re learning so much about how people are using it. I’m excited to see how Project Blink will evolve.”

Want to learn more about Project Blink, or give it a try? Find everything you need to know here.

Contributors:

Current Blink Core Members: Joel Brandt, Aseem Agarwala, Mira Dontcheva, Jovan Popovic, Joy Kim, Valentina Shin, Dingzeyu Li, Xue Bai, Jui-Hsien Wang, Hanieh Deilamsalehy, Ailie Fraser, Justin Salamon, Karrie Karahalios, Haoran Cai, Pankaj Nathani, Anh Truong, Esme Xu, Kim Pimmel, Muj Syed, and Ray Ma

Past Blink Core Members: Seth Walker, Cuong Nguyen, Chelsea Myers, Morgan Evans, Najika Yoo, Leo Zhicheng Liu, Tim Ganter, and Aleksander Ficek

Contributors from outside the Blink Core Team: Ali Aminian, Aashish Misraa, Xiaozhen Xue, Nick Bryan, Yu Wang, Fabian David Caba Heilbron, Ajinkya Kale, Ritiz Tambi, Juan Pablo Caceres, Ge Zhu, Oriol Nieto, Trung Bui, Naveen Marri, and Sachin Kelkar