As Scott Cohen, Senior Principal Scientist, celebrates 25 years at Adobe Research, he thinks back to 1999. He’d just wrapped up his PhD in computer science at Stanford and joined the Advanced Technology Group (ATG), a precursor to Adobe Research. The company was growing faster than ever, you’d often find the founders mingling with the team in the cafeteria, and Cohen was developing technology to segment images so that Adobe products could do more for users.

Over the years, Cohen has had a front row seat—and often a lead role—in developing big ideas and new tools that have fundamentally changed the way people create. He and his teams have developed technological innovations that are part of products from Adobe Photoshop to After Effects and Lightroom. He’s had a leadership role in big exploratory projects seeking new ways to edit images, including Project Stardust, and he leads a team at the forefront of research on vision and language. Along the way, Cohen has contributed to 125 U.S. patents (179 patents worldwide), published 46 papers at the top computer vision conferences CVPR, ICCV, and ECCV, mentored more than 50 interns, and even delivered a MAX Sneak called Color Chameleon.

We talked with Cohen about his work and some of the big changes he’s seen at Adobe Research.

What was it like to step into Adobe in 1999?

Adobe was much smaller back then. The founders, John and Chuck, were both research-minded, so they often joined us at the ATG lunch table, which was really exciting. And I remember that after each company meeting I would almost sprint back to the office, feeling so inspired to do something great.

Back then, Adobe Research was still ATG. We didn’t have our own floor—they’d seat us with the product team we were most likely to serve. I started on the Photoshop floor, and I was struck right away by how small the core team was to have built such an amazing product. I could see from day one how amazing and dedicated these people were. At the time, we were putting in long hours to ship Photoshop 6.0. I was working on an exciting feature called the Extract plug-in for masking out objects from an image so you could do things like put an object on a new background in a believable way.

How has Adobe Research changed over the years?

As we grew, and became Adobe Research, we started to encourage research publications and more university collaborations, and we began to work with interns. It’s become a great combination of serving Adobe’s customers, doing cutting-edge research, and being part of the academic community.

Along the way, there were huge new Adobe products, like Acrobat and Lightroom, and the company grew through acquisitions into areas like the digital marketing space. So our research started to have an effect on the whole ecosystem for creators and the enterprise. We also had opportunities to work on bigger projects with more investments.

Can you tell us more about your research area—image segmentation, editing, and understanding—and some of the most exciting challenges in the field?

Twenty-five years ago, image editing software was all about the pixels—but of course pixels aren’t how a person sees the world. When you look at a picture, you think of all the people and objects, how they’re laid out in space, and what’s happening in the photo. I always wanted to work on the problem of meeting users where they are, and seeing the way they see, so they can edit and find images better.

This requires tackling one of the most interesting problems in computer vision—figuring out how to go from a digital image to understanding the image. You start with the image, which is a 2D array of pixels. Each pixel typically has three numbers, one each for red, green, and blue, but you want to understand what’s in the image and then be able to segment objects to edit them. If it’s a picture of a man walking a dog, you have to figure out how to go from an array of three numbers at each pixel to knowing which pixels are part of the dog and which pixels are part of the man. That’s a huge challenge!
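To make that representation concrete, here is a minimal Python sketch of a tiny image stored as rows of red, green, and blue triples, alongside a per-pixel label grid. The pixel values, the `BACKGROUND` and `DOG` labels, and both helper functions are hypothetical and written by hand for illustration; a real system would have to predict the labels from the pixels, which is the hard part described above.

```python
# Toy 2x3 RGB image: each pixel is a (red, green, blue) triple in 0..255.
image = [
    [(200, 180, 150), (90, 60, 30), (90, 60, 30)],
    [(200, 180, 150), (200, 180, 150), (90, 60, 30)],
]

# Hypothetical per-pixel labels, hand-written here rather than predicted.
BACKGROUND, DOG = 0, 1
labels = [
    [BACKGROUND, DOG, DOG],
    [BACKGROUND, BACKGROUND, DOG],
]


def pixels_with_label(labels, wanted):
    """Return (row, col) coordinates of every pixel whose label equals `wanted`."""
    return [
        (r, c)
        for r, row in enumerate(labels)
        for c, label in enumerate(row)
        if label == wanted
    ]


def recolor(image, labels, wanted, new_color):
    """Return a copy of the image with every `wanted` pixel set to `new_color`."""
    return [
        [new_color if labels[r][c] == wanted else pixel
         for c, pixel in enumerate(row)]
        for r, row in enumerate(image)
    ]


dog_pixels = pixels_with_label(labels, DOG)
edited = recolor(image, labels, DOG, (0, 0, 0))
```

Once the label grid exists, an object-level edit such as recoloring the dog reduces to a simple lookup. Producing that grid from nothing but the three numbers at each pixel is the segmentation problem.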

How did you build editing tools in 1999, and how has AI changed the process?

Before the big breakthroughs in deep learning in the early 2010s, we were hand-designing features to understand images. We’d calculate gradients and make a histogram to figure out what part of an image was a cat or a chair, for example. We would try to codify object definitions: What really defines a chair or cat? As you can imagine, it was difficult to do this robustly.
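As a rough illustration of what a hand-designed feature of this kind can look like, the Python sketch below computes brightness gradients and collects their directions into a histogram. It is a simplified example in the general spirit of gradient histograms, not the method used in any Adobe product; the function name, the choice of four bins, and the test images are all assumptions made for illustration.

```python
import math


def gradient_orientation_histogram(gray, bins=4):
    """Histogram of gradient directions over interior pixels, weighted by magnitude.

    `gray` is a 2D list of brightness values. Directions are treated as
    unsigned, so they fall in the range 0..180 degrees.
    """
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for r in range(1, len(gray) - 1):
        for c in range(1, len(gray[0]) - 1):
            # Central differences approximate the horizontal and vertical gradient.
            gx = gray[r][c + 1] - gray[r][c - 1]
            gy = gray[r + 1][c] - gray[r - 1][c]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue  # flat region, no direction to record
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            index = min(int(angle // bin_width), bins - 1)
            hist[index] += magnitude
    return hist


# Dark on the left, bright on the right: brightness changes horizontally.
vertical_edge = [[0, 0, 10, 10] for _ in range(4)]
# Dark on top, bright on the bottom: brightness changes vertically.
horizontal_edge = [[0] * 4, [0] * 4, [10] * 4, [10] * 4]

hist_v = gradient_orientation_histogram(vertical_edge)
hist_h = gradient_orientation_histogram(horizontal_edge)
```

The two edges put their weight in different bins, so the histogram captures something about local shape. A classifier built on features like this is only as good as the features themselves, which is the limitation described in the next answer.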

Then machine learning turned the research on its head. We were able to take these handcrafted features and apply machine learning to make the best decisions. But that was still suboptimal because results were limited by the quality of the handcrafted features. The breakthrough new research was training the models using labeled data to figure out the optimal features for predicting what an object is or whatever task you wanted to perform. It was a revolution, and Adobe Research was at the forefront of that deep learning revolution within the company. Suddenly we had new opportunities to make robust tools that could make it so much easier for people to do things in Photoshop and other Adobe products. Since then, the strides we’ve made with machine learning and AI are just amazing. They drive product features that are of so much value to our customers.

Can you tell us about a favorite product feature you’ve worked on?

From my individual contributor days, I’d say my favorite was the quick selection tool. It was one of my earliest tech transfers, back in the early 2000s, and it’s still being used today. Before the quick selection tool, if you wanted to select something in an image with the state-of-the-art research method, you’d draw a stroke in the middle and then wait a second, and it would show you the pixels it thought were part of the thing. If it accidentally got something from the background, you’d draw a negative stroke to say, ‘I don’t want that.’ And then you’d wait a second and see if that corrected it.

Our innovation made the process live, so it would just show you the selection immediately while you were brushing. It was a novel experience on top of amazing technology, and I got to collaborate with some of the Photoshop legends to help develop it.

How about a favorite big project you’ve led?

I would say my favorite is Project Stardust. It’s a next-generation image editing project with an ambitious goal: to revolutionize image editing with an object-centric paradigm. It was another step toward letting users edit based on objects, not pixels.

We used image understanding and image generation to allow users to perform very sophisticated edits easily. It’s my favorite, in part, because it involved collaboration across Adobe Research, digital imaging, and design, and we all knew that the user experience is as important as the technology underneath the hood. This group effort continues to this day. In fact, Photoshop Web just shipped an object-centric editing feature, which is an idea that came from the Stardust work.

You also headed two research groups focused on computer vision within Adobe Research. Can you tell us a bit about them?

My first group made significant advances in image segmentation, image depth prediction, and image search in Adobe products and in publications using deep learning to improve our semantic understanding of images. My second—and current—group is adding natural language understanding expertise to develop methods that allow our users to just say what they want to edit, find, or generate in natural language.

For both groups, the goal was, and still is, to use image and language understanding to revolutionize image editing, search, and generation, and make our products communicate with users at the semantic level. These are the kinds of tools that the next generation of creatives will expect and demand.

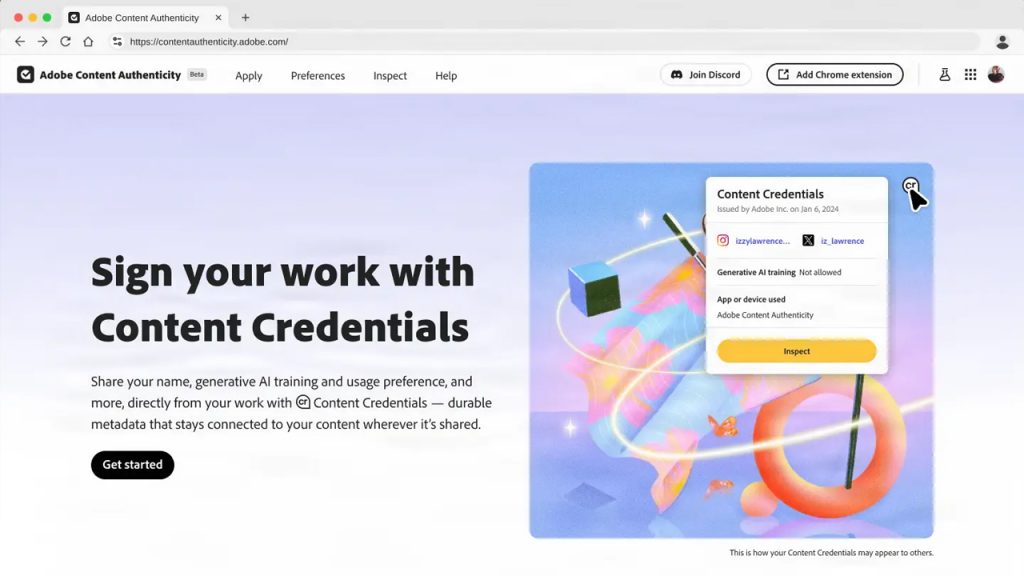

I’m super proud of my teams’ pioneering initiatives in big, bold projects including the image segmentation behind Photoshop’s latest selection tools, visual search for Adobe Stock, and the joint image-language understanding and synthesis technologies crucial for Firefly image generation and Photoshop Generative Fill. And I’m proud that we contribute technologies that validate and underpin the Content Authenticity Initiative, where many companies are joining us in adopting a standard for tracking the provenance of images—which is all about building trust and transparency in the age of AI.

On top of your research accomplishments, you’ve mentored more than 50 interns. What’s the best part of being a mentor?

It’s so satisfying to see interns’ development, and to have had a small hand in it—and I learn an incredible amount from them as well. They go on to run their own research labs and to become professors and stars in the industry and invent new things. I think that’s one of the legacies of Adobe Research—and my personal legacy, too.

Twenty-five years later, what do you still love about working at Adobe Research?

I can give you the most important answer in two words: the people. Without a doubt, these are some of the most gifted and dedicated people I’ve ever encountered.

I also feel extremely fortunate to contribute to products that people use to make their living. I think back to 2016, when I got to give a Sneak at Adobe MAX. At the party afterwards, so many creative professionals thanked me. It made me feel so good to see that people’s lives are affected in such a positive way by our work.

Overall, I’d say that Adobe Research is a place of ideas, which is why we can do such amazing things. And it’s a place where researchers can be heroes.
