At Adobe, we develop new Natural Language Processing (NLP) methods for collecting, processing, analyzing, and understanding large amounts of natural language data. The resulting NLP models power diverse applications across Adobe’s document and creative products including document intelligence, knowledge acquisition, natural language search across text, images, and videos, and intelligent conversational assistants.

The 2021 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL 2021) was held virtually from June 6 to 11, 2021. NAACL is one of the top research conferences on natural language processing and allied fields. In recent years, deep learning approaches and multimodal content understanding methods have made a significant impact at this conference.

Adobe presented a total of twelve papers (nine at the main conference; three at workshops) at the conference on research topics including question answering, information extraction, NLP fundamentals, linguistic style, multi-modal processing, and knowledge graphs.

Adobe Research has several research opportunities across locations. Please check out openings for full-time positions at Adobe Research, Adobe Research India, and Adobe Careers.

Question Answering

Being able to answer questions requires a deep understanding of both the question and the material needed to provide the answer whether documents, images, or structured knowledge graphs and data bases. Question answering for highly specialized domains, such as medicine, provide a further challenge due to the need for domain adaptation and knowledge. Adobe’s NLP researchers have a strong focus in this area as reflected by the six papers presented in this area.

X-METRA-ADA: Cross-lingual Meta-Transfer learning Adaptation to Natural Language Understanding and Question Answering

Meryem M’hamdi, Doo Soon Kim, Franck Dernoncourt, Trung Bui, Xiang Ren, Jonathan May

KPQA: A Metric for Generative Question Answering Using Keyphrase Weights

Hwanhee Lee, Seunghyun Yoon, Franck Dernoncourt, Doo Soon Kim, Trung Bui, Joongbo Shin, Kyomin Jung

MIMOQA: Multimodal Input Multimodal Output Question Answering

Hrituraj Singh, Anshul Nasery, Denil Mehta, Aishwarya Agarwal, Jatin Lamba, Balaji Vasan Srinivasan

NAACL SRW 2021

Open-Domain Question Answering with Pre-Constructed Question Spaces

Jinfeng Xiao, Lidan Wang, Franck Dernoncourt, Trung Bui, Tong Sun, Jiawei Han

NAACL BioNLP 2021

UCSD-Adobe at MEDIQA 2021: Transfer Learning and Answer Sentence Selection for Medical Summarization

Khalil Mrini, Franck Dernoncourt, Seunghyun Yoon, Trung Bui, Walter Chang, Emilia Farcas, Ndapa Nakashole

NAACL 2021 NLP for Medical Conversations (NLPMC) workshop

Joint Summarization-Entailment Optimization for Consumer Health Question Understanding.

Khalil Mrini, Franck Dernoncourt, Walter Chang, Emilia Farcas, Ndapa Nakashole

NLP Fundamentals

The underlying techniques used in NLP are rapidly evolving. Choosing the best technique for a given problem and application can be complex, with each approach having different strengths and weaknesses. One particularly interesting domain is how to combine the precision of classic symbolic NLP techniques with the robustness of the newer deep learning techniques.

A Context-Dependent Gated Module for Incorporating Symbolic Semantics into Event Coreference Resolution.

Tuan Lai, Heng Ji, Trung Bui, Quan Hung Tran, Franck Dernoncourt, Walter Chang

Towards Interpreting and Mitigating Shortcut Learning Behavior of NLU models.

Mengnan Du, Varun Manjunatha, Rajiv Jain, Ruchi Deshpande, Franck Dernoncourt, Jiuxiang Gu, Tong Sun, Xia Hu

Linguistic Style

Usage of natural language reflects not only the core semantic meaning but also the style that the speaker or writer wishes to convey. Linguistic style is extremely important to appeal to and be clearly understood by specific audiences. It is also crucial for understanding the broader context of the utterance including the speaker’s sentiment.

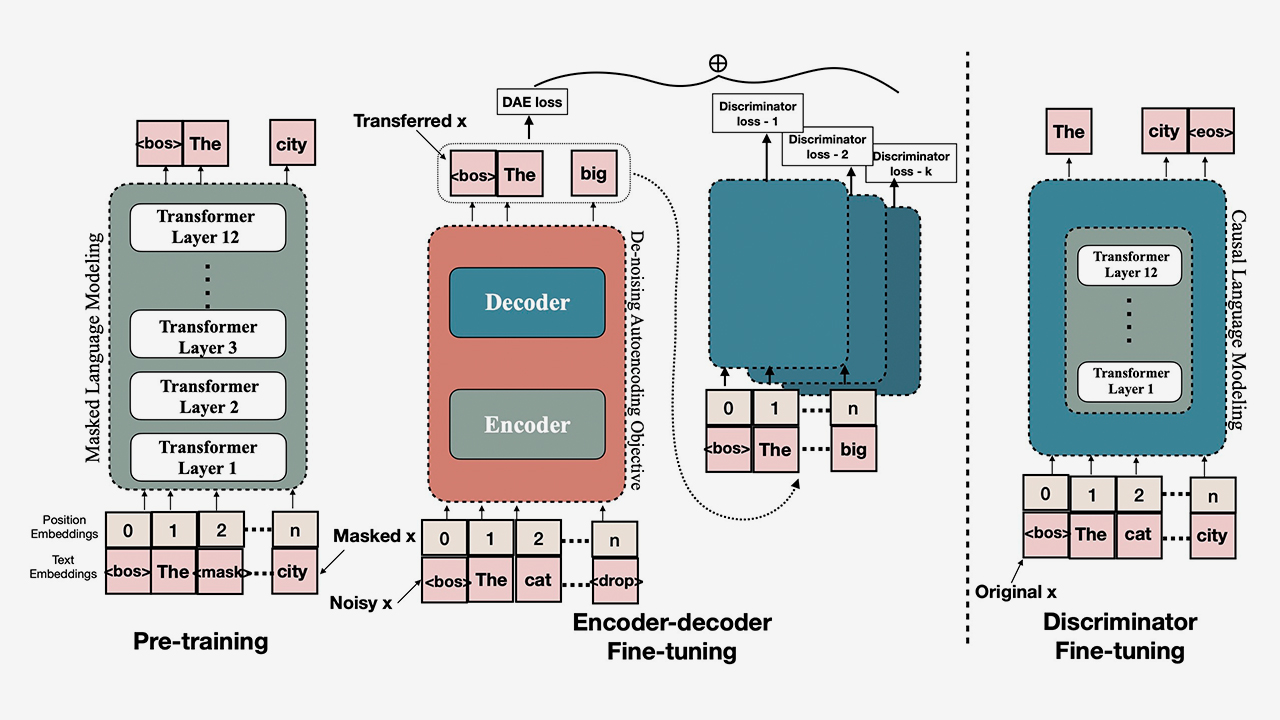

Multi-Style Transfer with Discriminative Feedback on Disjoint Corpus

Navita Goyal, Balaji Vasan Srinivasan, Anandhavelu N, Abhilasha Sancheti

WikiTalkEdit: A Dataset for modeling Editors’ behaviors on Wikipedia

Kokil Jaidka, Andrea Ceolin, Iknoor Singh, Niyati Chhaya, Lyle Ungar

Multi- and Cross-modal

Multi-modal models are a relatively new area for NAACL, traditionally being more the focus on vision processing conferences. Multi-modal models encoding both language and image features in the same abstract space have made massive strides in the past few years. Although often focusing on photographs, multi-modal models are also crucial in understanding complex document elements such as tables and forms.

TABBIE: Pretrained Representations of Tabular Data

Hiroshi Iida, Dung Thai, Varun Manjunatha, Mohit Iyyer

Knowledge Graphs

Knowledge graphs allow us to encode broader knowledge in a way that computers can rapidly process and reason over. Scaling these knowledge graphs to cover more data at high accuracy is a key unsolved problem.

Edge: Enriching Knowledge Graph Embeddings with External Text

Saed Rezayi, Handong Zhao, Sungchul Kim, Ryan Rossi, Nedim Lipka, Sheng Li