Adobe has launched Firefly, a new family of generative AI models for creative expression. The technology is now available in beta, and Firefly also powers Generative Fill, a new tool in the Photoshop desktop beta app.

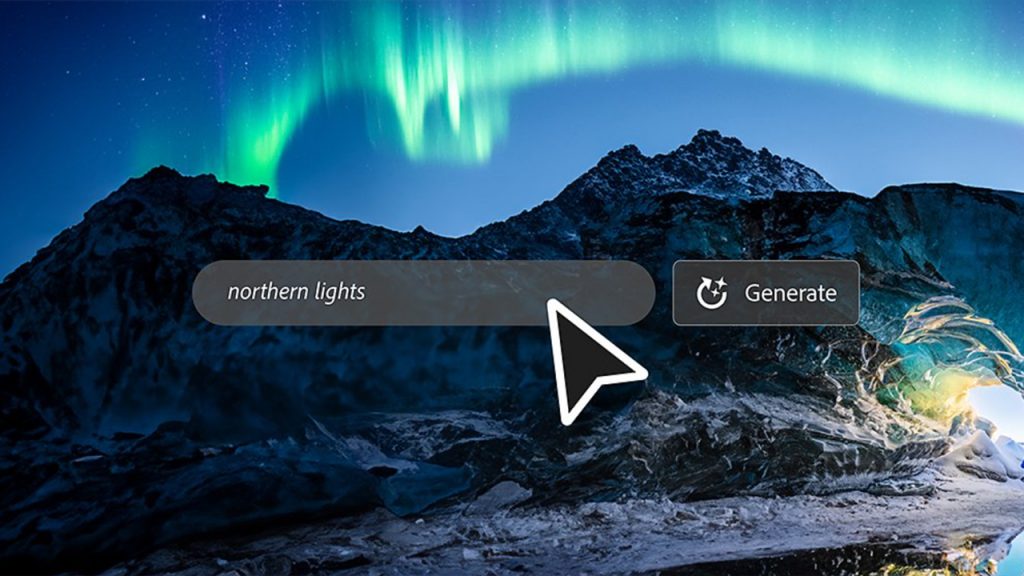

With Firefly, users can bring a creative vision to life by describing it in their own words. They can instantly iterate through variations, amplifying their creativity and their imaginations. Work by Adobe Research has helped to power the technology inside Firefly, and the research team is currently developing features for Firefly’s next phase.

How Adobe Research made it possible

Before there was Firefly, there were numerous collaborative research projects and published research papers that pioneered the generative AI approach. The Adobe Research team has co-authored more than 10 research papers related to Firefly technology. In addition, Adobe Research has participated in over 30 Firefly-related research projects at Adobe, many of which are ongoing today.

How is Firefly different from other kinds of generative AI?

The Firefly model includes a powerful style engine for color, tone, lighting, and composition. Users can leverage Firefly alongside their existing workflows in Adobe Creative Cloud, Document Cloud, Experience Cloud, and Adobe Express. Firefly technology also lets users add, extend, and remove content from images with simple text prompts through the new Generative Fill in the Photoshop beta.

Firefly was designed as a creative co-pilot, with commercial use in mind. Unlike some other generative AI technologies, it was trained on professional-grade, licensed images in Adobe Stock along with openly licensed content and public domain content where the copyright has expired.

Adobe worked closely with the creative community to build Firefly, and the company is committed to developing generative AI responsibly to support the creative process and give creators every advantage. To honor this commitment, Adobe is developing a compensation model for Stock contributors, helping create industry standards through the Content Authenticity Initiative (CAI), and working toward a universal “Do Not Train” tag so creators can decide whether to allow AI models to train on their work.

Adobe Research is working on the next phase of generative AI

Adobe Research is exploring ways to make Firefly more accessible to people of all skill levels and more customizable to each artist’s style. To that end, here are a handful of experimental projects that the research team is working on:

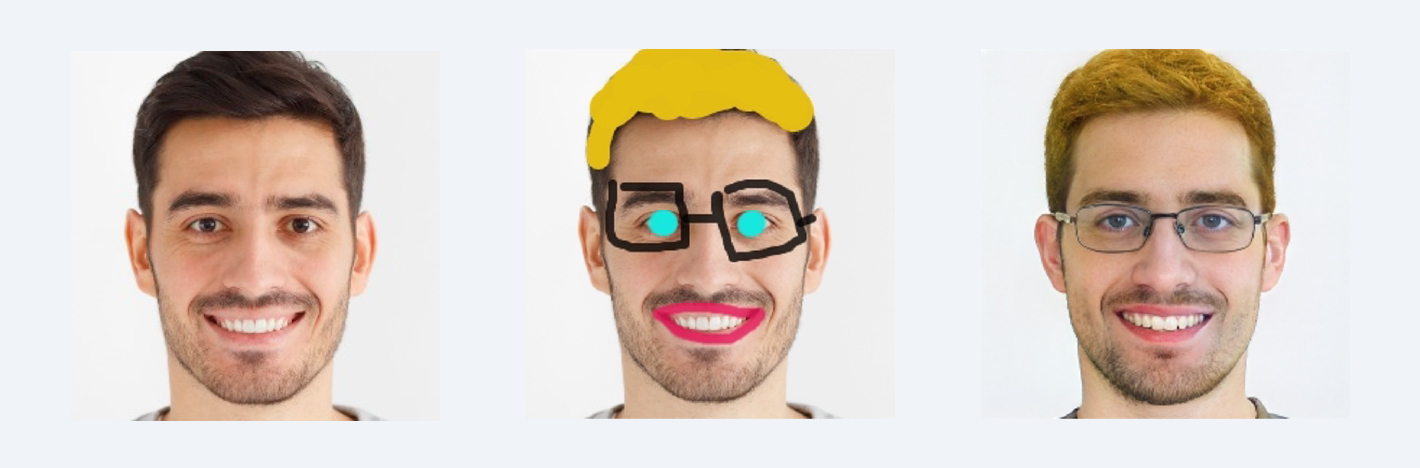

With progressive controllable image synthesis, users could edit images using simple marks, such as lines drawn with a stylus, to achieve changes in just a few brushstrokes.

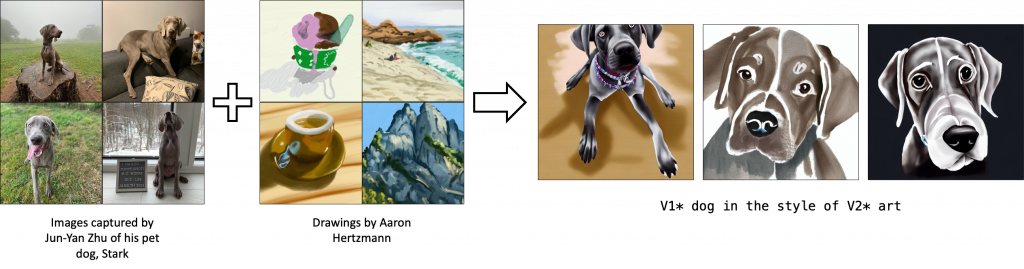

Customizable diffusion could allow creators to apply their own unique style to their work by choosing which images inform the generative AI. This could give users more control and make it easier to create a unified style across a body of work.

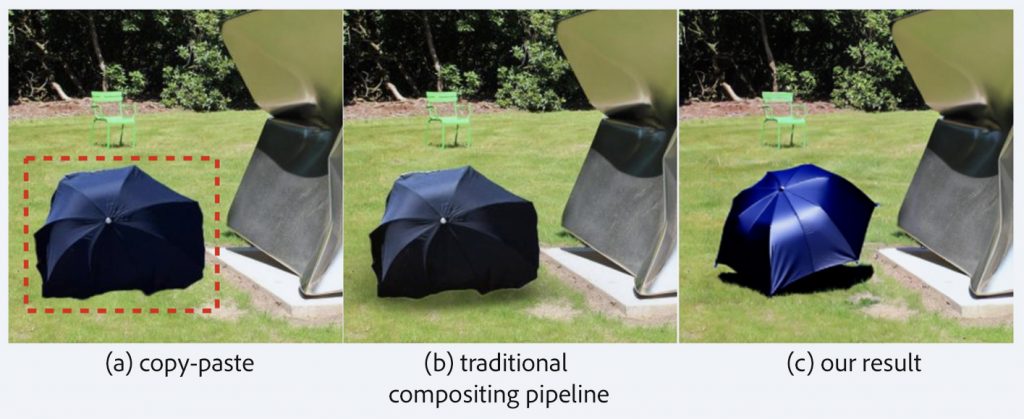

Generative image compositing could make it much easier to seamlessly combine elements from two or more images. The AI could help with color, shading, perspective, shadows, and more, making a usually difficult, multi-step process simple and straightforward.

Read about the technology behind Firefly and the path ahead here, and try out the Firefly beta here. Learn more about Generative Fill powered by Firefly here and download the Photoshop beta desktop app using instructions here to try it out!