Vishy Swaminathan, senior principal scientist at Adobe Research, has long been a force to be reckoned with in the field of video research. During his 13-year career at Adobe, Swaminathan and his colleagues have helped lay the groundwork for how we receive and watch streaming videos. He shares his journey into video technology, on both the creative and marketing sides, in this Q&A.

How did your career path lead you to Adobe Research?

Early in my career, I focused on the delivery of video, how it is consumed, and how it looks to the user. Before joining Adobe Research, I was a senior researcher at Sun Microsystems Laboratories.

What attracted me to Adobe was that when I joined, 80 percent of research done in the labs had product impact, and I wanted the chance to have that kind of impact. In general, at Adobe, it’s a closer, shorter loop than at other places.

Working with engineers, scientists, and product teams both at Sun and at Adobe has made me respect every part of that chain of how you get a product out. Innovation happens everywhere, and it can come from every part of the company. The way Adobe embraces this idea resonates really well with me. I am proud of what we do in research, but also extremely respectful of what’s done in other parts of the company to get a product out and delight customers.

What advances in video have you worked on that you are most proud of?

One of the biggest things that I worked on with a group of colleagues from Adobe Research and product teams was making video delivery completely seamless to end users, at massive scale. We worked on HTTP dynamic streaming to deliver videos. Adobe was one of the first companies to support HTTP streaming, and we, as a research team, played an important role in doing that. (It was initially used in Flash Media Server and in the Primetime suite of products.) Today, you see videos streamed over HTTP everywhere; it is the norm.

As a side note, I remember there was a time when I was working on streaming videos over the internet even before I came to Adobe. I heard people say that nobody in their right mind would pay money for this! They said, “I could just watch TV if I wanted to see videos!” I’m proud that the work we did as a research community then enabled the ubiquitous streaming we have today.

Another major research project we did was to bring videos to iOS devices in a secure way to enable paid video services. We worked on protected streaming for iOS, and it was super successful. Then, we moved on to data-driven video recommendations for publishers. This was the first service that varied the recommendation based on your context: whether you were at home, on a train, or at work. It gave very positive results. Currently, we are working on bringing both the data-driven insights and the HTTP streaming lessons that we learned in the video domain to the immersive world. We are working on improving the quality of VR and AR experiences by using consumption data to predict which part of the scene the user is likely to look at next, and then efficiently streaming the predicted objects or parts of the scene at the highest quality.

How has your work on video evolved in recent years?

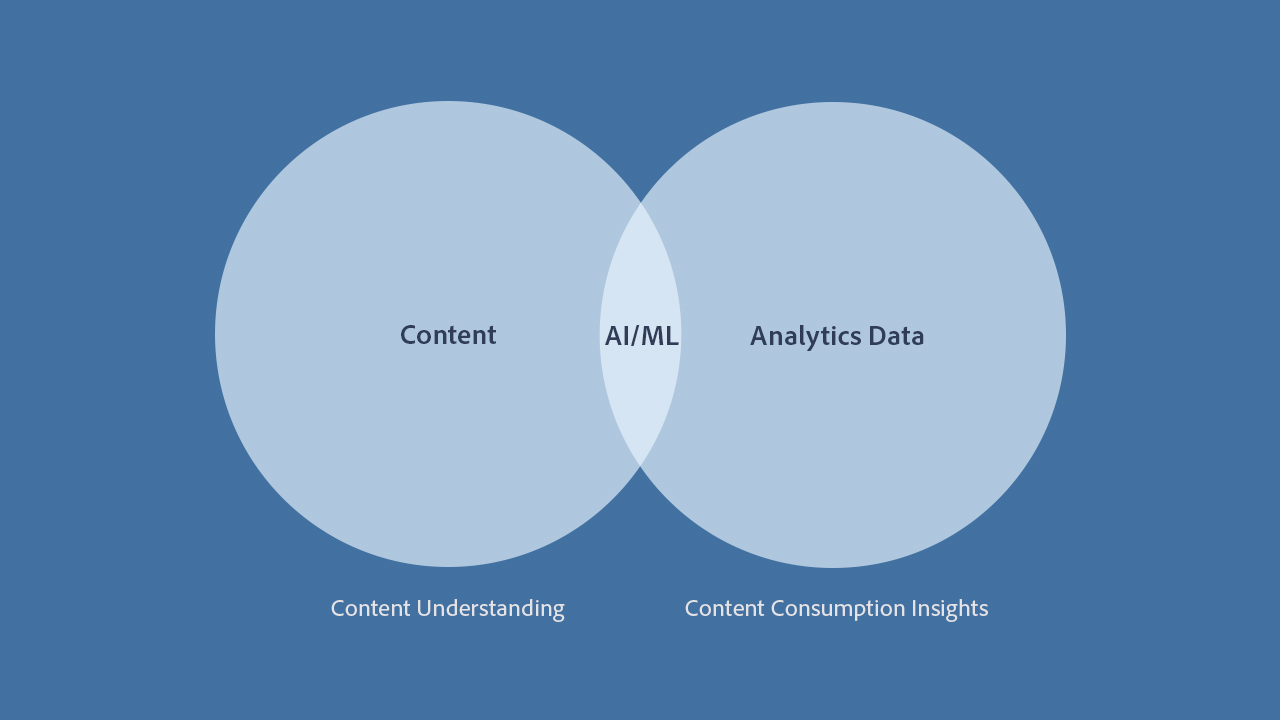

Initially, for more than 10 years, my focus was on entertainment video content for the publishers who created high-value video assets, such as TV shows. They monetize their video content directly. More recently, I’ve also focused on Adobe customers who make consumer products, for instance a shoe brand. They use videos as supporting assets to monetize their products in marketing campaigns. We have gone beyond just the video content and have worked with the user behavioral or consumption data from those campaigns. We not only use the audio-visual features of the videos, but also incorporate the data about how they are consumed and used, to make data-driven decisions about the campaign.

As I worked on this, I realized that this data could help create better or more effective video content, especially for marketing. You might need variants for different audiences, for example, so the information that we learn about an audience’s preferences could help define new content. We call this “closing the loop.” For this to be useful to the creator, it has to happen in a short period of time. That’s why we are now looking at real-time algorithms to help make decisions about campaigns and content. With AI trained on historical data, you could get real-time feedback, as you create content, on how well it is likely to do. At Adobe, we have many opportunities to bridge the creative and marketing sides of our business.

What kinds of data can help create better video content?

Imagine if exactly the same message could be posted on a social media platform for a range of audiences—for example, a PSA asking people, “wear a mask when you go out.” If there’s enough data about audience segments, we may be able to understand that the music associated with that video, or the colors, or text, that would appeal to me is very different from what would appeal to a teenager. You could take the core video and adapt it with the right music, colors, beats and tones that would immediately grab my interest, and then automatically change it to appeal to a teenager instead.

This transformation, at a high level, is actually possible now. And as these algorithms mature, it could be uniquely tuned to a small number of people, or even individualized to a particular person. Already, enormous advances have been made in AI-driven content creation.

Are you working on AI-based solutions?

Yes. We’ve discovered that the work people do in the end-to-end spectrum of video creation is 20 percent inspiration and 80 percent perspiration. This drudgery inhibits creativity. The more we can use AI to remove drudgery from creators’ work (such as making different versions of the same ad for different segments), the more time they will have to create bigger, better things. I want to emphasize that we want to use AI to augment creativity. We will still need creators, because algorithms depend on historical data. Creators try to do what has not been done before: absolutely new things. They want to truly innovate. That’s what they should be spending time on, instead of formulaic work that can be done by using data.

What other trends are you seeing in the creation and delivery of video?

What’s been missed are people’s video consumption patterns, that is, how people view videos differently. We still make movies aimed at audience segments, say an age group, and we still make ads for 30-second TV slots. But there is more to understand. How would I consume this news video and accompanying article, versus someone else? This hasn’t been studied enough.

I see a trend around modifying or even generating content that relates to these preferences. For example, a person who prefers videos might be presented with a video that has a text overlay to help drive the message. And someone who likes to read text might receive it augmented with images and video content.

There are tons and tons of opportunities in video creation and video consumption—this is an open field for innovation.

Based on an interview with Meredith Alexander Kunz, Adobe Research