Beyond Subtitles: Captioning and Visualizing Non-speech Sounds to Improve Accessibility of User-Generated Videos

ASSETS 2022

Publication date: October 26, 2022

Oliver Alonzo, Hijung Valentina Shin, Dingzeyu Li

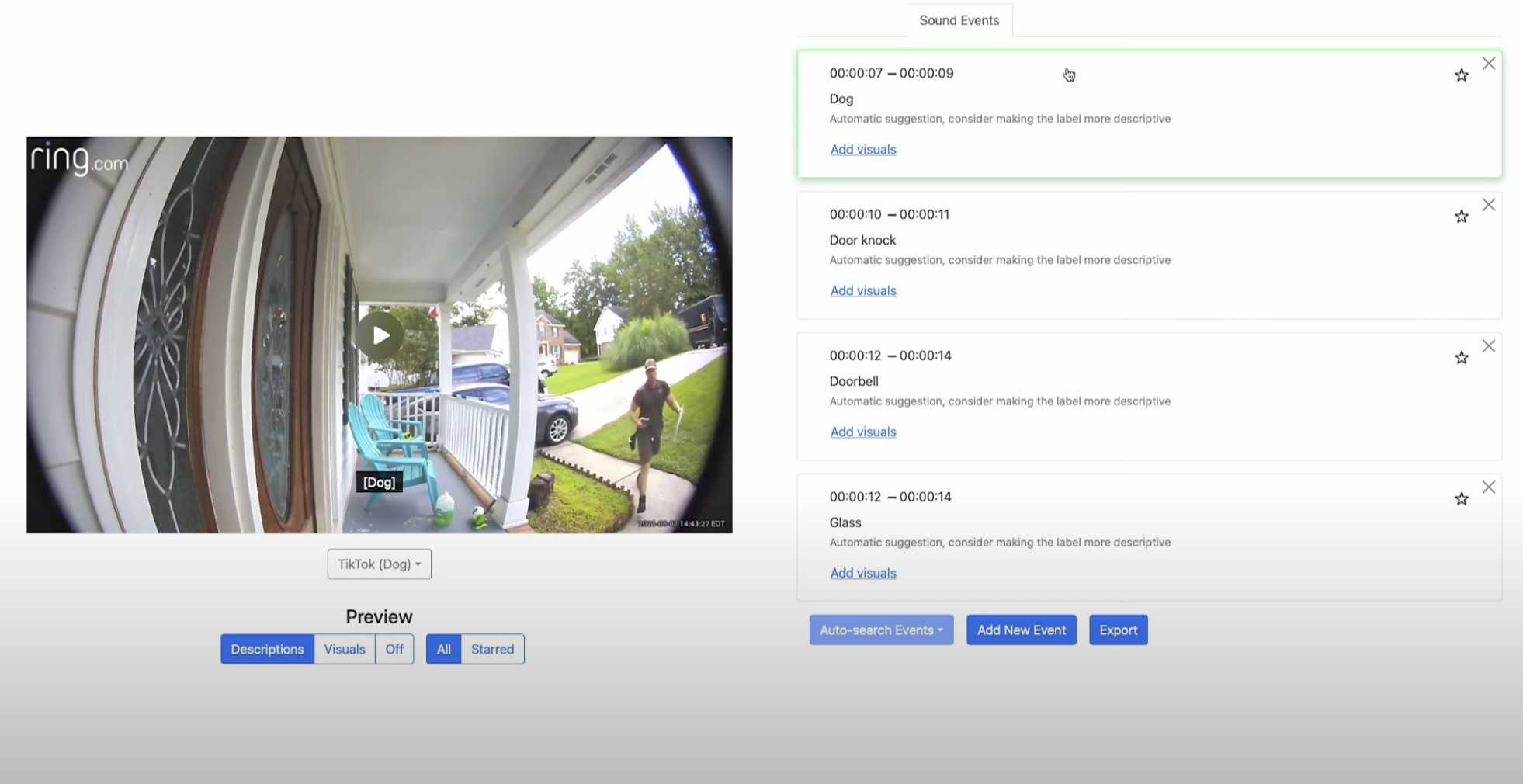

Captioning provides access to sounds in audio-visual content for people who are Deaf or Hard-of-hearing (DHH). As user-generated content in online videos grows in prevalence, researchers have explored using automatic speech recognition (ASR) to automate captioning. However, definitions of captions (as opposed to subtitles) include non-speech sounds, which ASR typically does not capture because it focuses on speech. We therefore explore DHH viewers' and hearing video creators' perspectives on captioning non-speech sounds in user-generated online videos using text or graphics. Formative interviews with 11 DHH participants informed the design and implementation of a prototype interface for authoring text-based and graphic captions using automatic sound event detection, which we then evaluated with 10 hearing video creators. Our findings identify DHH viewers' interest in having important non-speech sounds included in captions, as well as various criteria for selecting sounds and for judging when text-based versus graphic captions of non-speech sounds are appropriate. Our findings also identify hearing creators' requirements for automatic tools that assist them in captioning non-speech sounds.
