When marketers have questions about their data, their workflows, or how to create audiences and customer journeys, they can now ask the AI Assistant in Adobe Experience Platform (AEP) for help in plain natural language. Developed by Adobe Research in collaboration with the AEP engineering team, the AI Assistant provides time-saving answers that are grounded in product knowledge and customer data, backed by citations, and accompanied by an explanation of the logic behind each answer, helping users with complicated, data-intensive tasks.

The innovation was announced during the opening keynote of this year's Adobe Summit, the world's largest digital experience conference. But the AI Assistant had been years in the making before that debut.

How Adobe Research embedded with the AEP team to build the AI Assistant

More than two years ago, Adobe Research began collaborating closely with the AEP product team. “We wanted to embed with the product team to really understand this complex platform, along with customer pain points, the variety of customer use cases, and the power of the data that exists on the platform. It was all about making sure our future-looking research would be as impactful as possible,” says Vishy Swaminathan, Senior Principal Scientist for Adobe Research.

Even before this collaboration started, the researchers were focused on efficiency, reliability, cost, and ways to make sure customers could bring all their data into the platform as smoothly as possible. As large language models (LLMs) became more capable, and as the team learned more about AEP customers' most pressing needs, researchers began developing the AI Assistant, including additional pre- and post-processing models that enable a productive user experience grounded in product knowledge and customers' data.

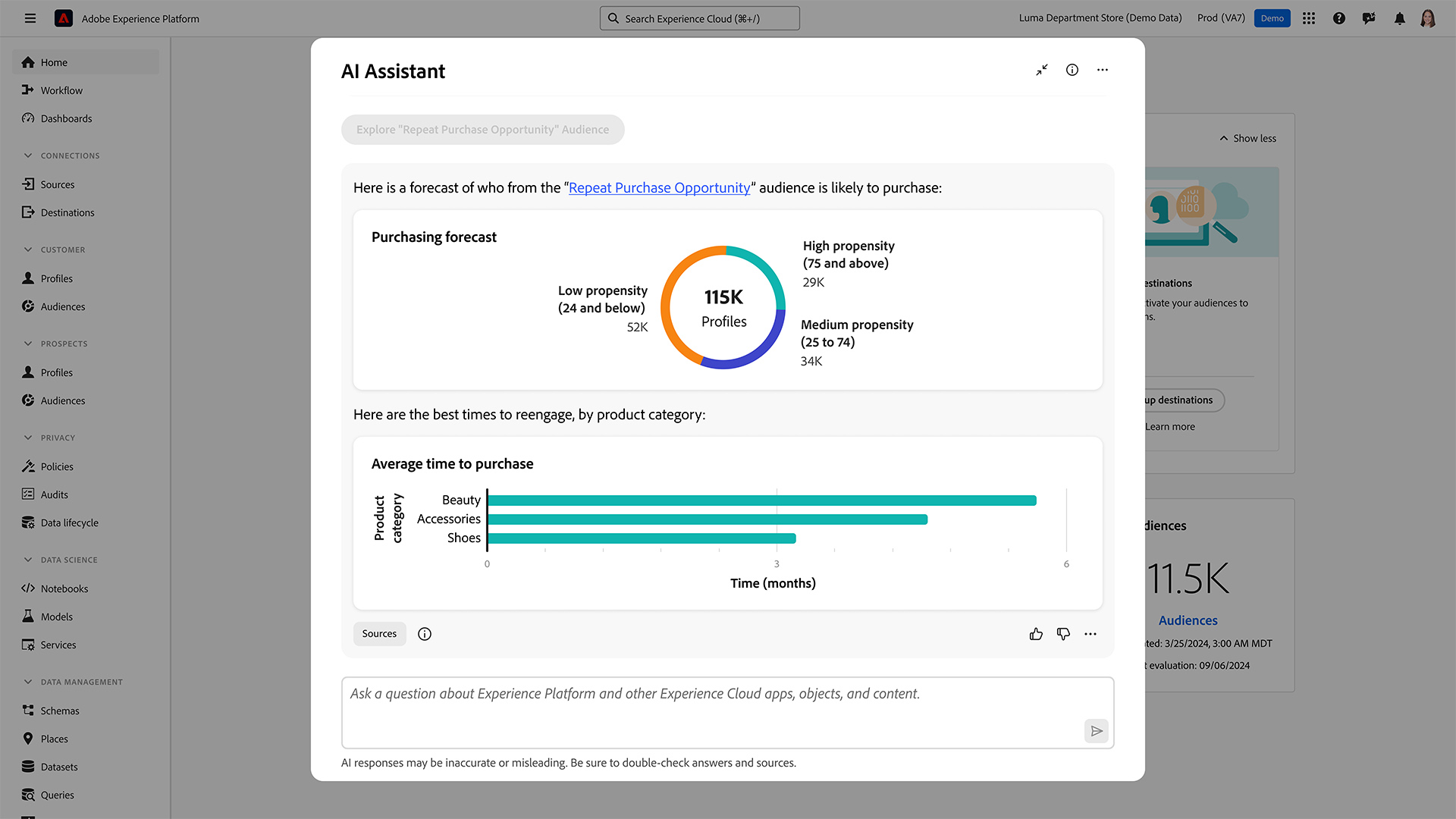

The result is an AI Assistant that works with the data and applications in AEP, allowing marketers, data scientists, and analysts to access and understand Adobe product knowledge, troubleshoot problems, and learn more. Researchers are now pushing further, developing the next generation of technologies that will let the AI Assistant automate tasks, simulate outcomes, and generate audiences and journeys, all using natural language.

“Using the AI Assistant is similar to other chat experiences,” explains Eunyee Koh, Principal Scientist. “But it’s tailored to enterprise AEP users who want to dig into their own data to understand more about their business and their customers.”

For example, imagine a marketer who wants to find duplicate audiences so they don't over-communicate with their customer base. They can simply ask the AI Assistant whether any duplicate audiences exist. The AI Assistant will search through thousands of audiences and respond with just the duplicates.

“Our AEP platform captures trillions of transactions per day for our customers. Now we’ve taken something really simple—interactions in natural language—and put it on top of all the data and applications in the AEP platform. This lets our users get accurate, actionable marketing insights quickly,” explains Swaminathan.

How the Adobe Research team put trust at the center of the model

The first challenge in building the AI Assistant was developing a fine-tuned model that could accurately detect a user's intent and rewrite the question with the proper context. From there, the system needed to retrieve relevant document snippets that would give the LLM the right context to generate a response.
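To make the steps described above more concrete, here is a minimal, hypothetical sketch of that kind of pipeline: detect the intent, rewrite the question with conversational context, then retrieve document snippets to place in front of the LLM. It is not Adobe's implementation. A keyword lookup stands in for the fine-tuned intent model, bag-of-words cosine similarity stands in for the real retriever, and every name here (`detect_intent`, `rewrite_query`, `retrieve`, `build_prompt`, the sample `DOCS`) is an illustrative assumption.

```python
import math
import re
from collections import Counter

# Hypothetical documentation snippets; a real system would index product docs.
DOCS = [
    "An audience is a set of profiles that share attributes or behaviors.",
    "A journey orchestrates messages to an audience across channels.",
    "Datasets store ingested records that conform to a schema.",
]

# Keyword rules stand in for a fine-tuned intent classifier (assumption).
INTENT_KEYWORDS = {
    "audience": {"audience", "audiences", "segment"},
    "journey": {"journey", "journeys", "campaign"},
    "data": {"dataset", "datasets", "schema", "data"},
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def detect_intent(question: str) -> str:
    """Return the intent whose keywords overlap the question most, else 'general'."""
    words = set(tokenize(question))
    best, score = "general", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        overlap = len(words & keywords)
        if overlap > score:
            best, score = intent, overlap
    return best


def rewrite_query(question: str, history: list[str]) -> str:
    """Naive context-aware rewrite: prepend the previous turn to a follow-up question."""
    return f"{history[-1]} {question}" if history else question


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[tuple[int, str]]:
    """Return the top-k (doc_id, snippet) pairs ranked by cosine similarity."""
    q = Counter(tokenize(query))
    ranked = sorted(enumerate(docs), key=lambda d: cosine(q, Counter(tokenize(d[1]))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, history: list[str]) -> str:
    """Assemble intent, retrieved context, and the rewritten question for the LLM."""
    intent = detect_intent(question)
    query = rewrite_query(question, history)
    context = "\n".join(f"[{i}] {s}" for i, s in retrieve(query, DOCS))
    return f"Intent: {intent}\nContext:\n{context}\nQuestion: {query}"
```

For example, `build_prompt("How do I create an audience?", [])` tags the question with the `audience` intent and places the two audience-related snippets in the context. Numbering the snippets in the prompt is a deliberate choice: it gives later stages something concrete to cite.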

As many users know, LLM-generated content sometimes misinterprets or mischaracterizes information, or "hallucinates" information on its own. Adobe researchers understood that they had to build a much more accurate system for Adobe customers. "To make the AI Assistant useful for our enterprise customers, who need real answers, we needed guardrails, including methods to prevent hallucinations, along with explanations and citations that let users know that the information they're receiving is accurate," explains Saayan Mitra, Principal Research Scientist.

To do this, researchers developed a series of post-processing steps that filter out potentially inaccurate or ungrounded responses. The AI Assistant also cites the sources behind each answer, so users can understand exactly how it arrived at its response.
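The article does not describe these post-processing steps in detail, so the following is only a hypothetical sketch of one simple form a grounding check with citations could take. Each sentence of a generated answer must be supported by one of the retrieved snippets, or the whole answer is withheld. Lexical word overlap is used here as a crude stand-in for more robust grounding checks, and the function name `ground_answer`, the 0.5 threshold, and the regex sentence splitting are all assumptions.

```python
import re
from typing import Optional


def tokens(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def ground_answer(answer: str, snippets: dict[int, str], threshold: float = 0.5) -> Optional[str]:
    """Attach a citation to each answer sentence, or return None if any sentence is ungrounded.

    A sentence counts as grounded when at least `threshold` of its words appear
    in a single snippet (a crude lexical proxy, assumed for illustration).
    """
    cited = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = tokens(sentence)
        if not words:
            continue
        best_id, best_score = None, 0.0
        for doc_id, snippet in snippets.items():
            score = len(words & tokens(snippet)) / len(words)
            if score > best_score:
                best_id, best_score = doc_id, score
        if best_id is None or best_score < threshold:
            # Withhold the whole answer rather than show an unsupported claim.
            return None
        cited.append(f"{sentence} [{best_id}]")
    return " ".join(cited)
```

With snippets about audiences and journeys, an answer that paraphrases them comes back with a `[id]` citation after each sentence, while an answer such as "Audiences refresh every five minutes." shares no words with either snippet and returns `None`. Rejecting the entire answer, instead of silently dropping the unsupported sentence, reflects the view that a wrong answer is a serious error.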

“Our requirements were quite stringent. When we put an LLM on top of enterprise applications and data, a wrong answer is a serious error. Our research focused on minimizing errors from passing through,” says Swaminathan.

Unveiling the AI Assistant to the world

When the new AI Assistant took center stage at Summit and people stopped by the AI Assistant booth for demos, the response was overwhelmingly positive. "They told us that the AI Assistant could save them weeks of searching through tutorials about how to do things. They were really excited about the efficiency of being able to get answers to what they need in just minutes," says Koh.

“It was fantastic to see the overwhelming reaction, which ranged from happy to ecstatic,” adds Swaminathan, who spent time talking with customers during the demos at Summit. “What made me proud was all of the Adobe Research technology behind it.”

The AI Assistant is now available in Adobe Experience Platform. Looking ahead, Swaminathan says the team plans to continue making AEP users' experiences even more efficient. "I believe we've only scratched the surface in terms of the richness we can bring with the AI Assistant for the Adobe Experience Platform. In the future, we want our customers to be able to bounce ideas around with the AI Assistant so they can come to their preferred conclusions and kick off new projects, whether it's a campaign, or detecting an anomaly, or anything else. They'll be able to accomplish what they want to do much faster than they can today."
