By Aaron Hertzmann, Adobe Research

Adobe Research continued its core mission of developing innovative new technologies in 2018, with Adobe authors publishing 82 research papers at the top computer vision and graphics conferences:

- CVPR 2018 (Conference on Computer Vision and Pattern Recognition): Adobe authors contributed to 30 papers

- SIGGRAPH 2018 (ACM Special Interest Group on Computer GRAPHics and Interactive Techniques): Adobe authors contributed to 12 papers, plus 4 Transactions on Graphics (TOG) papers

- ECCV 2018 (European Conference on Computer Vision): Adobe authors contributed to 27 papers

- SIGGRAPH Asia 2018: Adobe authors contributed to 9 papers

Nearly all of these papers came from university collaborations. Most were first-authored by university PhD students following successful summer internships working with Adobe researchers. (Find instructions on applying for a 2019 internship here.)

We also published actively in the fields of natural language processing, human-computer interaction, machine learning, and more; stay tuned for future posts about those papers. In 2018, across all fields, Adobe authors contributed to a total of more than 220 published research papers.

In addition, Adobe researchers received 9 best paper awards in 2018, at AAAI (Association for the Advancement of Artificial Intelligence), CHI (ACM CHI Conference on Human Factors in Computing Systems), SIGDIAL (ACL/ISCA Special Interest Group on Discourse and Dialogue), and others.

Here is a sampling of Adobe Research’s 2018 papers in computer vision and graphics:

The Unreasonable Effectiveness of Deep Features as a Perceptual Metric

Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, Oliver Wang

CVPR 2018

[ Paper ] [ Project Page ] [ GitHub ] [ Poster ] [ Adobe Research Blog ] [ Two Min Papers ] [ BibTeX ]

Multi-Content GAN for Few-Shot Font Style Transfer

Samaneh Azadi, Matthew Fisher, Vladimir Kim, Zhaowen Wang, Eli Shechtman, Trevor Darrell

CVPR 2018

[ Paper ] [ GitHub/Project ] [ Berkeley Blog ] [ MAX Sneak ]

Disentangling Structure and Aesthetics for Style-Aware Image Completion

Andrew Gilbert, John Collomosse, Hailin Jin, Brian Price

CVPR 2018

[ Paper ] [ Project Page ]

Visual to Sound: Generating Natural Sound for Videos in the Wild

Yipin Zhou, Zhaowen Wang, Chen Fang, Trung Bui, Tamara L. Berg

CVPR 2018

[ Paper ] [ Project ] [ Adobe Research Blog ]

PairedCycleGAN: Asymmetric Style Transfer for Applying and Removing Makeup

Huiwen Chang, Jingwan Lu, Fisher Yu, Adam Finkelstein

CVPR 2018 (oral)

[ Paper ]

Flow-Grounded Spatial-Temporal Video Prediction from Still Images

Yijun Li, Chen Fang, Jimei Yang, Zhaowen Wang, Xin Lu, Ming-Hsuan Yang

ECCV 2018

[ Paper ] [ Project ]

Materials for Masses: SVBRDF Acquisition with a Single Mobile Phone Image

Zhengqin Li, Kalyan Sunkavalli, Manmohan Chandraker

ECCV 2018 (oral)

[ Paper ]

Scene-Aware Audio for 360° Videos

Dingzeyu Li, Timothy R. Langlois, Changxi Zheng

SIGGRAPH 2018

[ Paper ] [ Video ] [ Project Page ]

Mode-Adaptive Neural Networks for Quadruped Motion Control

He Zhang, Sebastian Starke, Taku Komura, Jun Saito

SIGGRAPH 2018

[ Paper ] [ Project ] [ Code ] [ Video ] [ News ]

Learning Local Shape Descriptors with View-Based Convolutional Neural Networks

Haibin Huang, Evangelos Kalogerakis, Siddhartha Chaudhuri, Duygu Ceylan, Vladimir Kim, Ersin Yumer

TOG 2018 (presented at SIGGRAPH 2018)

[ Project ] [ Paper ]

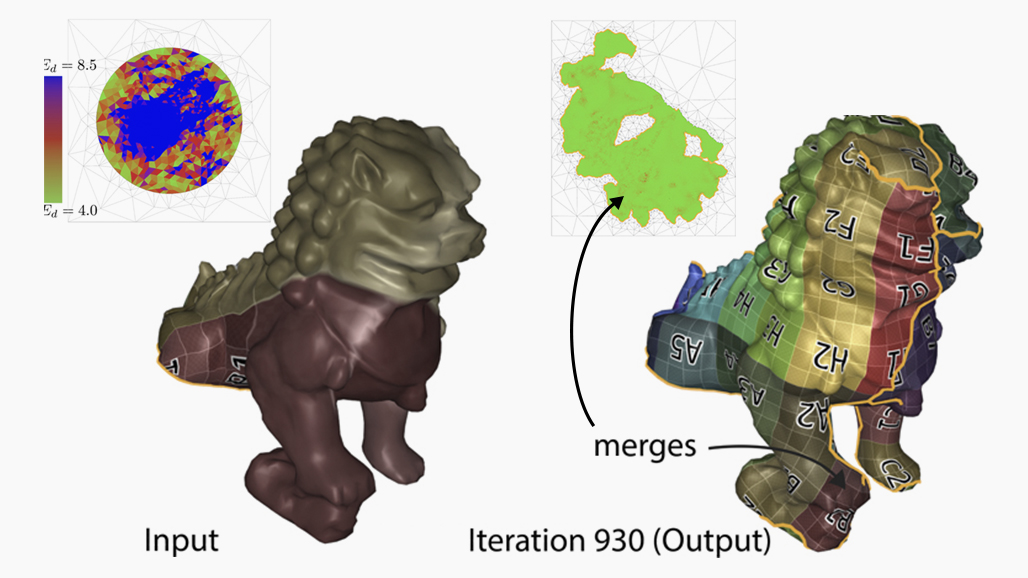

Image provided by Minchen Li, Danny M. Kaufman, Vladimir G. Kim, Justin Solomon, Alla Sheffer. Model provided courtesy of INRIA by the AIM@SHAPE Repository. © ACM 2018. This is the author’s version of the work. It is posted here by permission of ACM for your personal use. Not for redistribution.