“In the coming age, computer graphics will become an integral part of our language.” – Ken Perlin (New York University professor)

Augmented and mixed-reality technologies enable us to enhance and extend our perception of reality by incorporating virtual graphics into real-world scenes. One simple but powerful way to augment a scene is to blend dynamic graphics with live-action footage of real people performing. In the past, this technique was applied in the post-processing stage as a special effect for music videos, scientific documentaries, and instructional materials. Manipulating graphics in real time is now becoming more popular in weather forecasts, live television shows, and, more recently, social media apps with video overlays.

As live-streaming becomes an increasingly powerful cultural phenomenon, an Adobe Research team is exploring how to enhance these real-time presentations with interactive graphics to create a new storytelling environment. Traditionally, crafting such an interactive and expressive performance required technical programming or highly specialized tools tailored for experts.

Our approach is different, and could open up this kind of presentation to a much wider range of people.

Our core contribution is a flexible, direct manipulation user interface (UI) that enables both amateurs and experts to craft augmented reality presentations.

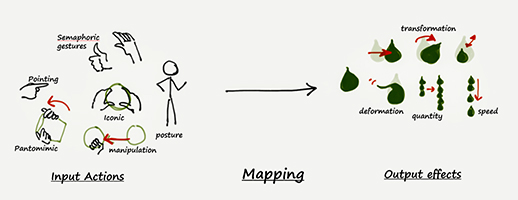

Using our system, the presenter prepares the slides beforehand by importing the graphical elements and mapping input actions (such as gestures and postures) to output graphical effects using nodes, edges, and pins. Then, in presentation mode, the presenter triggers and manipulates the graphical elements in real time with gestures and postures. This kind of augmented presentation leverages presenters' innate, everyday skills to enhance their communication with the audience.
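To make the idea of mapping input actions to output effects more concrete, here is a minimal sketch in Python. It is not the system's actual implementation: the class names (`GraphicElement`, `MappingGraph`), the gesture labels, and the effects are all hypothetical, and pins are left out for brevity. It only illustrates how a recognized gesture or posture could act as an input node whose edges lead to effects on a graphical element.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class GraphicElement:
    """A hypothetical graphical element placed on a slide."""
    name: str
    visible: bool = False
    scale: float = 1.0


@dataclass
class MappingGraph:
    """Hypothetical mapping from input actions to output effects.

    Each key is an input node (a gesture or posture label); each
    callable in its list is an edge to an output effect.
    """
    edges: Dict[str, List[Callable[[], None]]] = field(default_factory=dict)

    def connect(self, action: str, effect: Callable[[], None]) -> None:
        # Authoring step: wire an input action to an effect.
        self.edges.setdefault(action, []).append(effect)

    def trigger(self, action: str) -> int:
        # Presentation step: run every effect wired to a recognized action.
        effects = self.edges.get(action, [])
        for effect in effects:
            effect()
        return len(effects)


def show(element: GraphicElement) -> None:
    element.visible = True


def enlarge(element: GraphicElement, factor: float) -> None:
    element.scale *= factor


# Authoring: prepare the slide and its mappings beforehand.
chart = GraphicElement("bar_chart")
graph = MappingGraph()
graph.connect("raise_right_hand", lambda: show(chart))
graph.connect("spread_arms", lambda: enlarge(chart, 2.0))

# Presenting: a gesture recognizer (assumed, not shown) emits action labels.
graph.trigger("raise_right_hand")
graph.trigger("spread_arms")
```

In this sketch an unmapped action simply triggers nothing, so the presenter's ordinary body language would not disturb the slide unless it matches an authored mapping.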

Our system leverages the rich gestural (from direct manipulation to abstract communications) and postural language of humans to interact with graphical elements.

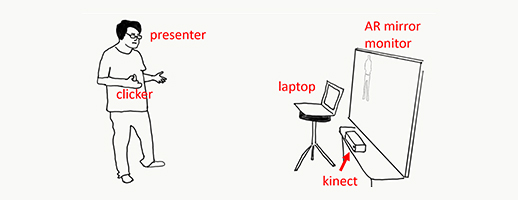

The hardware setup consists of a Microsoft Kinect (for skeletal tracking), a monitor that serves as an augmented reality mirror, and a wearable clicker for controls (e.g., next, previous). Our system leverages machine learning algorithms for computer vision and gesture recognition.

By simplifying the mapping between gestures, postures, and their corresponding output effects, our UI enables users to create customized, rich interactions with the graphical elements.

Our user study with amateurs and professionals demonstrates the potential usage and unique aspects of this augmented medium for storytelling and presentation across a range of application domains. The resulting live presentations include an academic research paper, a cooking tutorial, interior planning, meditation tips, and educational content.

We anticipate that interactive graphics will help shape our real-time virtual communications capabilities by empowering people to leverage whole-body language, speech, and context.

For more details about our approach, please see our project video (below) and paper.

Project video:

Contributors:

Rubaiat Habib, Li-Yi Wei, Wilmot Li (Adobe Research)

Nazmus Saquib (MIT Media Lab)