Part 1: Image Filters

by Aaron Hertzmann, Adobe Research

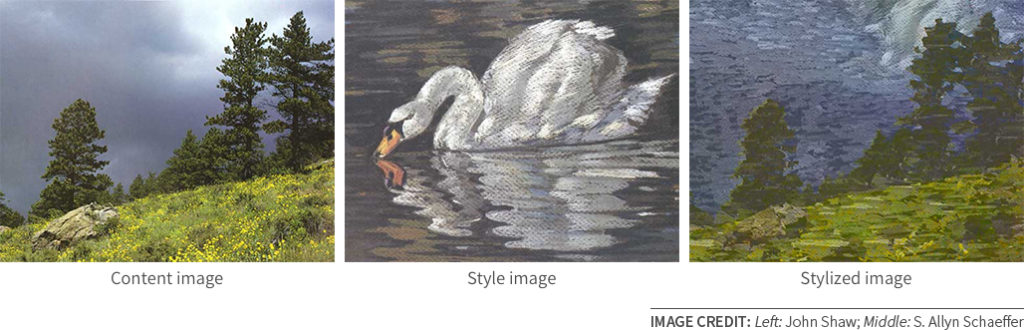

This three-part series of blog posts tells the story (so far) of image stylization algorithms. Image stylization is the process of taking an image as input and producing a stylized version as output. The image above shows an example in which a photograph is transformed by the style automatically extracted from a pastel drawing by a professional artist.

In this first post, I’ll describe some of the earliest stylization methods. These methods were procedural: people tried to write code that directly implemented various artistic styles. This led to very controllable algorithms and insights, but, at some point, it became too difficult to capture the complex nuances of an artist’s style. To address these issues, researchers began to develop example-based methods, which take their style from sample artwork rather than from hand-written rules.

In the second post, I’ll describe patch-based algorithms, including the Image Analogies algorithm, which created the image above and whose descendants are still being developed.

In the third post, I’ll describe the new neural network algorithms for stylization that have become immensely popular. Fast neural network stylization algorithms have appeared in many prominent apps and websites, like Prisma and Facebook Live, for use in making photos and videos more “artistic.” People are asking what these algorithms suggest about the relationship of AI and art, even though these algorithms are conceptually quite simple.

There is still much to do to understand these different algorithms and what they tell us about art, and to make them even better tools for visual communication and expression. Besides making everyone’s selfies look cooler, image stylization has the potential to enable new forms of communication, building on traditional artistic techniques. Traditional hand-painted artwork and hand-drawn animation can be expressive and beautiful in unique ways, and stylization algorithms could enable new opportunities for creative expression in these old styles or new versions of them. These tools could be useful in different ways both to end-users and to professional artists.

The presentation here represents my own view on this subject. The references here are not comprehensive; I am leaving out some significant research in order to streamline the story.

Procedural Filters

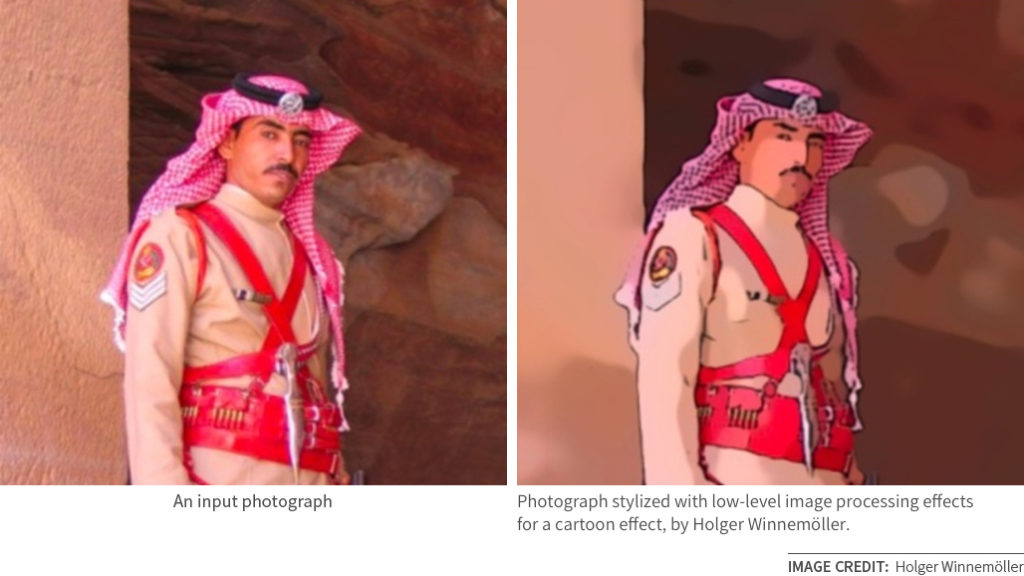

The earliest stylization algorithms arose from classical signal processing. Simply running an edge detector or a “smart blur” (anisotropic diffusion) can give an intriguing artistic effect. Many variants came along in the 1980s and beyond, including several of Adobe Photoshop’s stylization effects. One mature example is the real-time abstraction method developed by Holger Winnemoeller at Adobe Research, which illustrates how low-level blurring and sharpening operations can create extremely compelling cartoon-like effects.
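To make the "edge detector as a filter" idea concrete, here is a minimal sketch in plain Python. It uses the standard Sobel operator, which is one common edge detector and not necessarily what any particular product uses. The function names, the threshold, and the toy image are all assumptions made for illustration.

```python
def sobel_edges(gray):
    """Gradient magnitude of a 2D list of grayscale values.

    Border pixels are left at 0 to keep the sketch short.
    """
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal and vertical Sobel responses.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def line_drawing(gray, threshold=1.0):
    """Dark lines (0) on white paper (1) wherever the gradient is strong.

    The threshold is an arbitrary illustrative value.
    """
    edges = sobel_edges(gray)
    return [[0 if e > threshold else 1 for e in row] for row in edges]


# Toy input: a dark left half next to a bright right half.
image = [[0.0] * 4 + [1.0] * 4 for _ in range(6)]
drawing = line_drawing(image)
```

On the toy image, the only "ink" lands along the boundary between the dark and bright halves, which is the crude line-drawing effect described above.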

Many traditional styles gain life and vitality from the presence of visible marks made by the artist’s hands, such as brush strokes or pen strokes. In a groundbreaking paper at SIGGRAPH 1990, Paul Haeberli (then at Silicon Graphics) suggested turning photographs into paintings with a painting-like interface. Starting from a blank canvas, with each click, a small blob would appear wherever you clicked; after a while, these blobs would begin to look not unlike an artwork. He also put a Java version online. When I first came across this applet online, I was quite amazed by how such a simple procedure could produce such lovely results. The interactive pen-and-ink algorithms by Michael Salisbury, David Salesin, and others at University of Washington demonstrated tremendous visual sophistication for line drawings.
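The click-to-paint procedure can be sketched in a few lines of plain Python. This is a simplified illustration of the idea as described here, not Haeberli's actual implementation: the blob is a flat disc, the clicks are simulated with random positions, and every name and parameter is hypothetical.

```python
import random


def paint_blob(canvas, photo, cx, cy, radius):
    """Stamp a disc on the canvas, colored by the photo pixel under the click."""
    h, w = len(photo), len(photo[0])
    color = photo[cy][cx]
    for y in range(max(0, cy - radius), min(h, cy + radius + 1)):
        for x in range(max(0, cx - radius), min(w, cx + radius + 1)):
            if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                canvas[y][x] = color


# Toy "photograph": red on the left, blue on the right.
photo = [[(255, 0, 0) if x < 8 else (0, 0, 255) for x in range(16)]
         for _ in range(16)]

# Blank canvas; None marks unpainted pixels.
canvas = [[None] * 16 for _ in range(16)]

# Simulated user clicks (assumption: a real interface would read the mouse).
rng = random.Random(0)
for _ in range(200):
    paint_blob(canvas, photo, rng.randrange(16), rng.randrange(16), radius=2)
```

Because each blob takes a single color from the pixel under the click, blobs near the red/blue boundary spill slightly across it, which is part of what gives the result its hand-made look.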

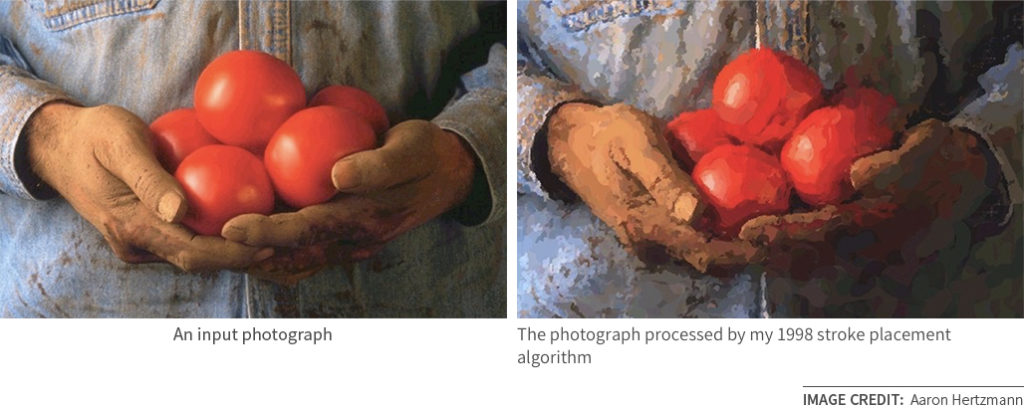

My first experiments in this space came by the sort of coincidence that, in retrospect, defines our paths. In college, I had double-majored in computer science and art, and then started grad school and began searching for a topic for my thesis. At SIGGRAPH 1997, I attended a paper presentation by Pete Litwinowicz on automatically stylizing video with brush strokes, building on Haeberli’s algorithm. (Litwinowicz later worked on the impressive Painted World sequence in the movie “What Dreams May Come.”) He implied in his talk that artists paint in the same way as his algorithm worked: placing a grid of brush strokes over an image. I thought to myself, “That’s not how artists work!” I went back to school and, left alone by my indulgent thesis advisor, implemented Litwinowicz’ algorithm, and then started modifying it.

The resulting algorithm added various scales of brush strokes. Artists often rough out a composition with a sketch, and then fill in details. My algorithm approximates this — in a very loose sense — by starting with great big brush strokes and then adding small strokes to refine the image. I also used long curved strokes, rather than simple short strokes. This method was published at SIGGRAPH 1998, and became my first research paper and the first part of my PhD thesis. Based on some suggestions from my advisor Ken Perlin and the staff in our lab at NYU, we put together a video version and a live painting exhibit that was shown at a few art shows.
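The coarse-to-fine idea can be sketched as follows. This is a loose illustration under heavy assumptions, not the published algorithm: strokes are flat discs instead of long curved strokes, the image is grayscale, and the grid layout, error measure, threshold, and all names are hypothetical choices made to keep the example short.

```python
def stamp(canvas, cx, cy, radius, value):
    """Paint a disc of the given value onto the canvas."""
    h, w = len(canvas), len(canvas[0])
    for y in range(max(0, cy - radius), min(h, cy + radius + 1)):
        for x in range(max(0, cx - radius), min(w, cx + radius + 1)):
            if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                canvas[y][x] = value


def pixel_error(canvas, source, x, y):
    """Difference between canvas and source; blank pixels count as infinite."""
    if canvas[y][x] is None:
        return float("inf")
    return abs(canvas[y][x] - source[y][x])


def paint_coarse_to_fine(source, radii=(4, 2, 1), threshold=0.1):
    """Paint with big brushes first, then refine with smaller ones."""
    h, w = len(source), len(source[0])
    canvas = [[None] * w for _ in range(h)]
    strokes = []  # (x, y, radius) in the order they were painted
    for radius in radii:
        # Visit the image in grid cells the size of the current brush.
        for gy in range(0, h, radius):
            for gx in range(0, w, radius):
                cell = [(x, y)
                        for y in range(gy, min(h, gy + radius))
                        for x in range(gx, min(w, gx + radius))]
                errors = [pixel_error(canvas, source, x, y) for x, y in cell]
                # Only paint where the canvas is still a poor match.
                if sum(errors) / len(errors) > threshold:
                    x, y = cell[errors.index(max(errors))]
                    stamp(canvas, x, y, radius, source[y][x])
                    strokes.append((x, y, radius))
    return canvas, strokes


# Toy grayscale source: dark left half, bright right half.
source = [[0.0] * 8 + [1.0] * 8 for _ in range(16)]
canvas, strokes = paint_coarse_to_fine(source)
```

Large strokes block in the composition first, and smaller strokes are added only where the canvas still differs from the source, so flat regions tend to keep their broad strokes while detail accumulates near edges.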

I’ve written a survey paper which covers these kinds of stroke-based algorithms in much more detail.

Energized by this initial success, I worked on making my painting algorithms more sophisticated. My main idea was to devise an optimization algorithm for stroke placement, based on my theory that the artistic process can be described as an optimization procedure (later articulated here). However, I didn’t make much progress on it, in part because the computers of the time weren’t up to this optimization. It also became too frustrating to try to write better mathematical equations to describe how artists work, or even to articulate what that should look like. It was time for a different approach.

In the next blog post, I’ll describe the development of example-based algorithms.